As artificial intelligence continues to advance, deepfake technology has raised significant policy concerns. In 2025, lawmakers advanced numerous bills to combat the misuse of this technology, particularly its use to create non-consensual and misleading content. This article summarizes key developments in deepfake regulation over the past year and outlines what to expect in 2026.

Key Takeaways:

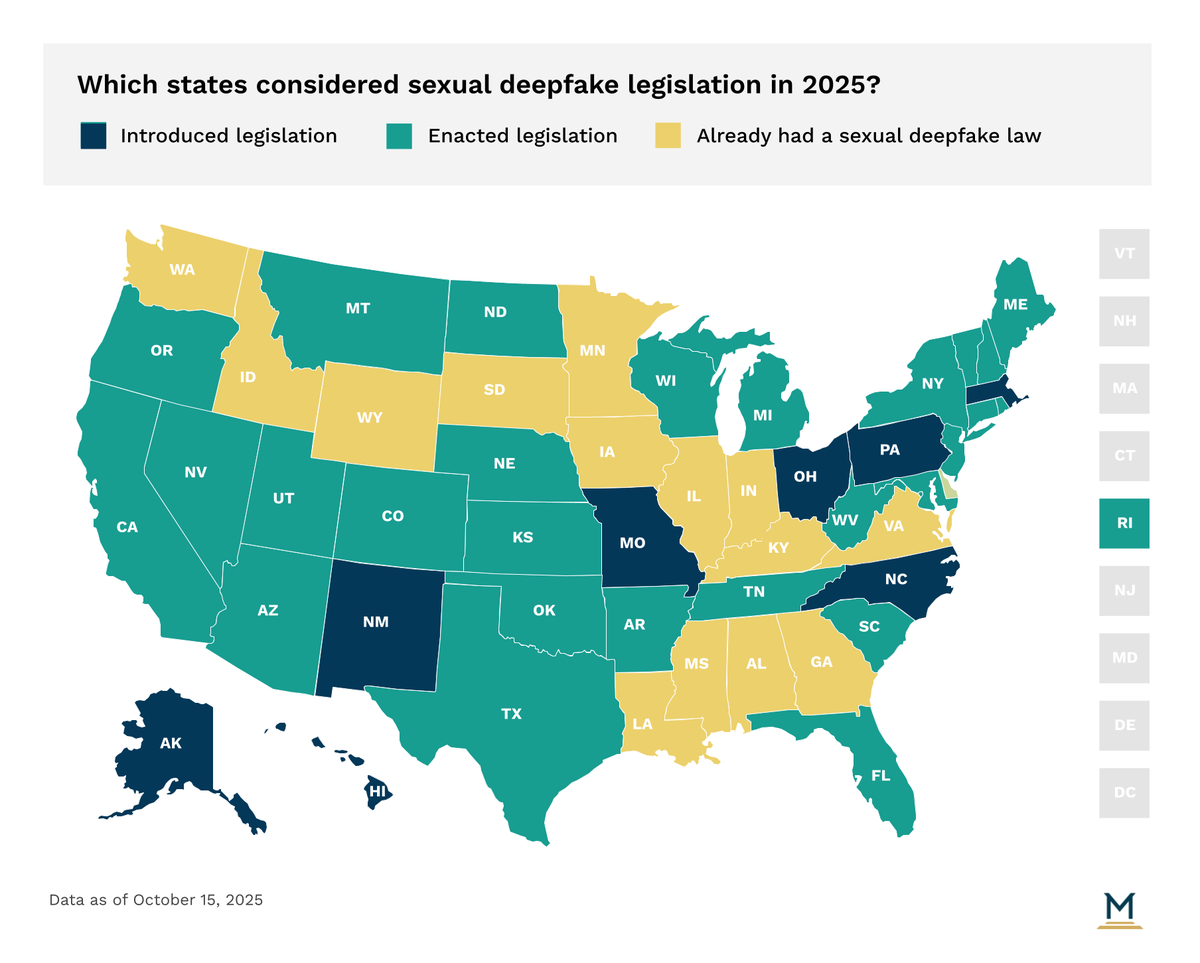

- In 2025, legislators across the nation introduced bills targeting non-consensual sexual deepfakes and AI-generated child sexual abuse material.

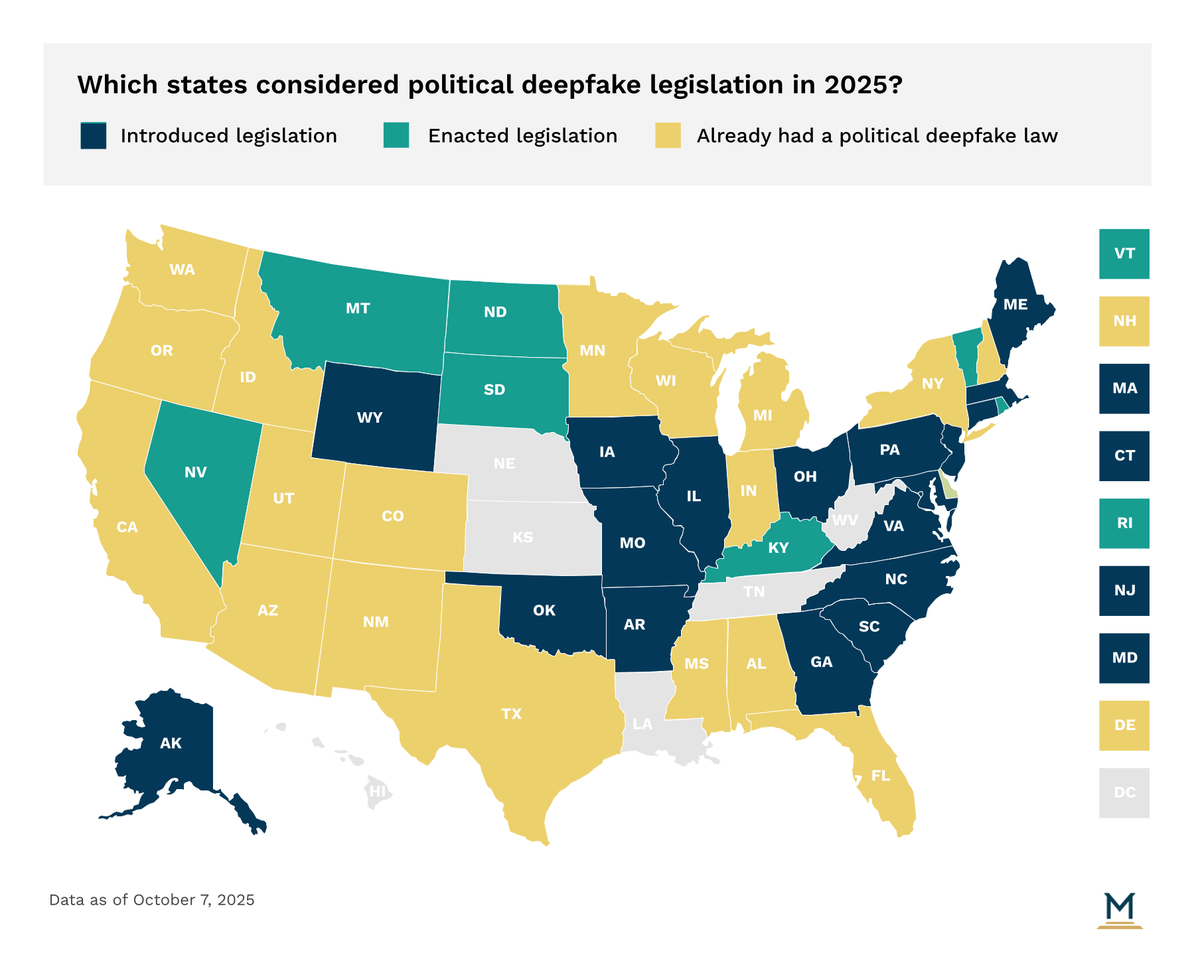

- The scope of state AI deepfake legislation has expanded to include political deepfake regulations, which mandate disclaimers on digitally altered content in campaign advertisements; however, several of these laws face constitutional challenges related to free speech.

- Federal law, such as the Take It Down Act, now requires online platforms to remove unlawful AI-generated content, particularly non-consensual sexual deepfakes.

- Looking ahead to 2026, legislation targeting non-consensual deepfakes is expected to extend beyond individual creators to include generative AI platforms, payment processors, and hosting services that facilitate the production and distribution of deepfake content.

At the end of each year, our policy analysts provide insights into the most pressing issues debated in state legislatures. Here are the significant developments and overarching trends observed in the deepfake policy landscape throughout 2025, along with expectations for the upcoming year.

Deepfake Legislative Developments in 2025

Regulation of Sexual Deepfake Content

While generative AI tools enable creative manipulation of images and videos, they can also be used to place people's likenesses into sexual or otherwise inappropriate contexts without their consent, harming their reputations. Incidents in which high school students were targeted with sexual deepfakes have prompted lawmakers in every state to introduce legislation addressing the distribution of such content. These bills vary: some focus on non-consensual sexual deepfakes, some on child sexual abuse material (CSAM), and some on both.

Political Deepfake Advertising Requirements

Deepfake technology has also found its way into political communications. In 2024, a U.S. Senate candidate ran a digitally altered advertisement depicting an opponent holding a sign she never actually held. In response, lawmakers have sought to require disclaimers on political ads that include digitally altered content, so that viewers understand the material may not be genuine.

Constitutional Challenges to Deepfake Laws

Despite progress in enacting deepfake regulations, these laws face challenges grounded in constitutional free speech protections. In California, a federal judge has already struck down a state law, finding it overly broad and content-based rather than narrowly tailored to "false speech that results in legally recognizable harms." Another California law, which prohibited online platforms from hosting deceptive political deepfake content during an election period, was also struck down.

Future Regulatory Approaches for 2026

As we move toward 2026, legislators are expected to extend their focus beyond penalizing individual creators and distributors of deepfake content. Future regulations may also reach the entities that facilitate its creation and distribution, including generative AI platforms, payment processors, hosting services, and cloud providers. This shift is already visible at the federal level: the Take It Down Act, enacted in 2025, requires online platforms to remove non-consensual sexual content that has been altered or generated by AI. Legislators may also seek to mandate watermarks, digital signatures, or cryptographic provenance tags on AI-generated media, potentially coordinated under standards set by the National Institute of Standards and Technology (NIST) or the Coalition for Content Provenance and Authenticity (C2PA).

Influence on Technology Policy

Technology policy affects nearly every industry, and state policymakers are becoming increasingly proactive in addressing this arena. At MultiState, our team is well-versed in the issues at hand along with the key players and organizations involved. We leverage our expertise to assist clients in effectively navigating their policy priorities. Our customized strategic solutions facilitate the development and implementation of a proactive multistate agenda aligned with your company’s objectives. To learn more, visit our Tech Policy Practice.

In conclusion, the evolution of deepfake technology calls for ongoing legislative attention to protect individuals and communities from its harmful applications. As policymakers respond to these challenges, the landscape of deepfake regulation is likely to keep evolving in pursuit of a more secure digital environment for all.