Welcome, readers. The following analysis by Servaas Storm highlights how Artificial Intelligence (AI) continues to fall short of its extravagant promises. Despite the influx of cash and computational power, as the author suggests, we are witnessing a significant disconnect between expectation and reality. Storm’s insights present a critical examination of this ongoing AI hype, which he relabels as “Artificial Information.”

By Servaas Storm, Senior Lecturer of Economics, Delft University of Technology. Originally published at the Institute for New Economic Thinking website.

This paper contends that (i) we have reached “peak GenAI” regarding current Large Language Models (LLMs); further investments in data centers and chips will not yield the desired breakthrough in “Artificial General Intelligence” (AGI); returns are diminishing rapidly; (ii) the AI-LLM industry, alongside the broader U.S. economy, is in a speculative bubble that is on the verge of collapsing.

The United States is currently experiencing an extraordinary AI-driven economic boom. The stock market has surged, buoyed by inflated valuations of tech firms involved in AI, which are contributing hundreds of billions of dollars to economic growth through investments in data centers and other AI infrastructure. This AI investment frenzy is rooted in the belief that AI will significantly enhance productivity for both companies and workers, leading to unprecedented corporate profits. However, by the summer of 2025, the optimism of generative Artificial Intelligence (GenAI) enthusiasts, fueled by promises from figures like OpenAI’s Sam Altman that AGI is just around the corner, began to sour.

Let’s take a closer look at the hype. As early as January 2025, Altman proclaimed strong confidence in achieving AGI. This optimism was shared by OpenAI’s partner and major investor Microsoft, which had released a paper in 2023 stating that the GPT-4 model was already showing “sparks of AGI.” Elon Musk expressed similar certainty in 2024, claiming that the Grok model developed by his company xAI would achieve AGI (intelligence greater than humanity’s) by 2025 or 2026. Meanwhile, Meta CEO Mark Zuckerberg declared that his company was committed to achieving “full general intelligence” and that super-intelligence was in sight. Likewise, Dario Amodei, co-founder and CEO of Anthropic, suggested that “powerful AI” (smarter than Nobel laureates) might emerge as early as 2026, ushering in an era of unprecedented abundance, with the caveat that AI could also pose existential risks.

In the eyes of Musk and his GenAI counterparts, the primary roadblock to AGI is the insufficient computing power required to train AI models, stemming from a lack of advanced chips. According to Morgan Stanley, the demand for enhanced data and processing capabilities will necessitate around $3 trillion in investment by 2028—a figure that exceeds the capacity of global credit and derivatives markets. Driven by a desire to outperform China in AI, proponents believe the U.S. can achieve AGI by rapidly expanding data center infrastructure, an unmistakably “accelerationist” perspective.

Interestingly, AGI is poorly defined and may serve more as a marketing tool for AI promoters to attract funding. Fundamentally, AGI posits that a model can generalize beyond specific examples presented in its training data, akin to how some humans can adapt to various tasks after seeing only a few examples, learning and adjusting methods as needed. AGI systems are envisioned to outsmart humans, devise novel scientific concepts, and execute both innovative and routine coding tasks. They promise to illuminate new medical solutions for diseases like cancer, address climate change, drive autonomous vehicles, and optimize agricultural production. The potential disruption AGI could cause extends well beyond workplaces to sectors such as healthcare, energy, agriculture, communications, entertainment, transportation, R&D, innovation, and science.

OpenAI’s Altman famously claimed that AGI could “discover new scientific principles,” asserting, “I think we’ve cracked reasoning in our models,” while also acknowledging, “we’ve still got a long way to go.” When he announced the launch of ChatGPT-5 in August, Altman proclaimed that users would find the model “far more useful, smarter, and intuitive,” likening it to conversing with “an expert” capable of aiding any user goal.

However, the situation began to unravel swiftly.

ChatGPT-5 Is a Letdown

The first sign of trouble appeared with ChatGPT-5, which turned out to be a disappointment—a collection of minor improvements encased in a routing architecture that failed to deliver the groundbreaking advancements Altman had led people to expect. User experiences have been lackluster. The MIT Technology Review reported: “While the release included several enhancements to the ChatGPT experience, it still falls short of AGI.” Alarmingly, internal assessments by OpenAI revealed that GPT-5 “hallucinates”—producing incorrect or fabricated information—about one in ten times on factual queries when online. Without internet access, GPT-5 faltered with nearly 50% of its answers being inaccurate—a serious concern. Even more troubling is the notion that such ‘hallucinations’ may reflect biases woven into the training datasets, which could result in AI generating misleading crime statistics that align with societal biases.

AI chatbots are increasingly utilized to disseminate misinformation (see here and here). Research indicates that these chatbots produce false claims in response to controversial news topics 35% of the time—almost double the rate from the previous year (here). By curating, structuring, and filtering information, AI shapes public interpretation and debate while favoring predominant perspectives, often suppressing alternative viewpoints or fabricating convenient narratives. A pressing question remains: Who governs these algorithms? Who establishes the rules for tech advancements? The ability of GenAI to foster systematic biases and misinformation implies significant societal consequences and risks that must be considered in evaluating its broader impact.

Constructing Larger LLMs Is Leading Nowhere

The challenges faced by ChatGPT-5 cast doubt on the GenAI industry’s strategy of scaling up LLMs. Critics, including cognitive scientist Gary Marcus, have argued that merely making LLMs bigger will not lead to AGI, and GPT-5’s shortcomings reinforce those claims. A growing body of evidence indicates that LLMs are not built on solid world models. Instead, they work primarily as sophisticated pattern-matching systems, which is why they cannot even play chess competently and keep making errors that baffle users.

My recent INET Working Paper discusses three compelling research studies indicating that ever-larger GenAI models do not perform better; rather, they regress, lacking true reasoning capability and simply mimicking reasoning-like text. For instance, a recent study from MIT and Harvard shows that LLMs, even when trained on comprehensive physics data, do not uncover the fundamental principles within that data. Vafa et al. (2025) found that LLMs can accurately predict a planet’s next position in orbit but fail to grasp the underlying explanation, Newton’s Law of Gravity. Instead, they construct arbitrary rules that allow them to make accurate predictions without uncovering the forces at play. Shockingly, LLMs cannot deduce physical laws from their training data, nor can they effectively pick out relevant information from the internet. When uncertain, they tend to fabricate answers.
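The gap between predicting an orbit and understanding gravity can be illustrated with a toy sketch. It is only an analogy under stated assumptions, not the method used by Vafa et al.: the function names, the circular orbit, and the fixed angular step `dtheta` are all hypothetical choices made for illustration. A simple rule fitted to past positions predicts the next position almost perfectly, yet it contains no mass, no force, and no inverse-square law.

```python
import math

def circular_orbit(n_steps, radius=1.0, dtheta=0.1):
    """Sample (x, y) positions on a circular orbit at a fixed angular step."""
    return [(radius * math.cos(k * dtheta), radius * math.sin(k * dtheta))
            for k in range(n_steps)]

def fit_recurrence(points):
    """Least-squares fit of c in p[t+1] + p[t-1] = c * p[t].

    This is pure pattern fitting: the rule knows nothing about mass,
    force, or the gravitational constant.
    """
    num = den = 0.0
    for prev, cur, nxt in zip(points, points[1:], points[2:]):
        for axis in (0, 1):
            num += (nxt[axis] + prev[axis]) * cur[axis]
            den += cur[axis] ** 2
    return num / den

def predict_next(prev, cur, c):
    """Extrapolate the next position from the last two using the fitted rule."""
    return (c * cur[0] - prev[0], c * cur[1] - prev[1])

orbit = circular_orbit(50)
c = fit_recurrence(orbit[:40])                 # "train" on the first 40 points
guess = predict_next(orbit[40], orbit[41], c)  # predict a held-out point
error = math.dist(guess, orbit[42])
print(f"fitted coefficient: {c:.6f}, prediction error: {error:.2e}")
```

The fitted coefficient equals `2 * cos(dtheta)`, a number tied to this one orbit and sampling rate. A planet at a different distance moves at a different angular speed, so the same rule would mispredict it, whereas Newton's law would cover both cases.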

Worse, AI systems are incentivized to guess rather than admit ignorance, an issue recognized by OpenAI’s own researchers in a recent study. Guessing is rewarded because, who knows, the answer might be correct. At present, this error cannot be fixed. It may therefore be wiser to read the acronym AI as “Artificial Information” rather than “Artificial Intelligence.” The takeaway is clear: this is bad news for anyone hoping that scaling LLMs would lead to better outcomes (see also Che 2025).

95% of Generative AI Pilot Projects in Companies Are Failing

Corporations rushed to announce AI initiatives to bolster their stock prices, but reports started circulating that AI tools weren’t delivering as promised. An August 2025 report titled The GenAI Divide: State of AI in Business 2025, published by MIT’s NANDA initiative, revealed that a staggering 95% of generative AI pilot projects are failing to generate revenue growth. As detailed by Fortune, “generic tools like ChatGPT stall in enterprise use due to the lack of adaptation to workflows.”

Many companies that laid off workers in favor of AI have begun reversing course. For example, the Swedish “Buy Burritos Now, Pay Later” firm Klarna boasted in March 2024 that its AI assistant was replacing 700 laid-off employees, only to be forced to rehire them as gig workers in summer 2025 (see here). Similar stories surfaced from IBM, which had to rehire staff after making approximately 8,000 workers redundant due to automation (see here). Recent U.S. Census Bureau data indicate that AI adoption has been declining among companies with more than 250 employees.

MIT economist Daron Acemoglu (2025) predicts limited productivity benefits from AI over the next decade and warns of possible negative social consequences from certain AI applications. “We will still need journalists, financial analysts, and HR employees,” Acemoglu says. “AI will impact a small subset of office roles centered on data summarization and pattern recognition, representing only about 5% of the economy.” Similarly, recent studies analyzing AI adoption among 25,000 workers in Danish industries show that GenAI technologies have had negligible effects on earnings and recorded work hours, with productivity gains averaging just 3%, a far cry from the revolutionary impact these AI systems were supposed to have.

Even predictions that tech roles will become obsolete due to GenAI are proving inaccurate. OpenAI researchers found earlier this year that advanced AI models like GPT-4o and Anthropic’s Claude 3.5 Sonnet still cannot rival human programmers. These AI systems struggle to recognize widespread bugs or their context, resulting in incomplete or erroneous solutions. Another study, by the nonprofit Model Evaluation and Threat Research (METR), found that programmers using AI tools in early 2025 were slowed down rather than sped up: they spent 19% more time on their tasks with AI assistance (see here).

The U.S. Economy at Large Is Hallucinating

The lackluster release of ChatGPT-5 raises questions about OpenAI’s capacity to create and market viable consumer products. The implications, however, extend beyond OpenAI: the American AI industry was founded primarily on the belief that AGI is imminent. Sufficient “compute” (vast numbers of Nvidia AI GPUs and expansive data centers) is presumed to be what it takes to produce “intelligence.” This belief underpins the idea that “scaling,” that is, investing billions of dollars in chips and data centers, is the only path forward. The strategy has become second nature to tech firms, venture capitalists, and Wall Street financiers, both in pursuing AGI and in building infrastructure for anticipated AI demand.

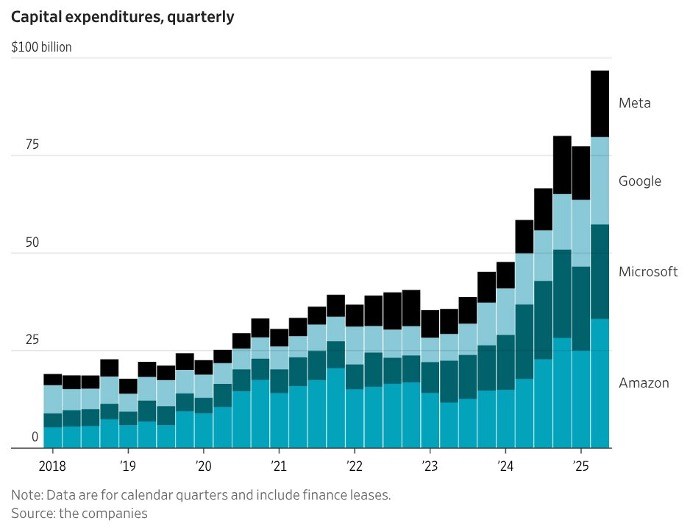

In 2024 and 2025, major tech companies poured approximately $750 billion into data centers, with plans to allocate an astonishing $3 trillion from 2026 to 2029 (Thornhill 2025). The so-called “Magnificent 7” (Alphabet, Apple, Amazon, Meta, Microsoft, Nvidia, and Tesla) invested over $100 billion in data centers in the second quarter of 2025; Figure 1 illustrates the capital expenditures from four of these corporations.

FIGURE 1

Source: Christopher Mims (2025), https://x.com/mims/status/1951…

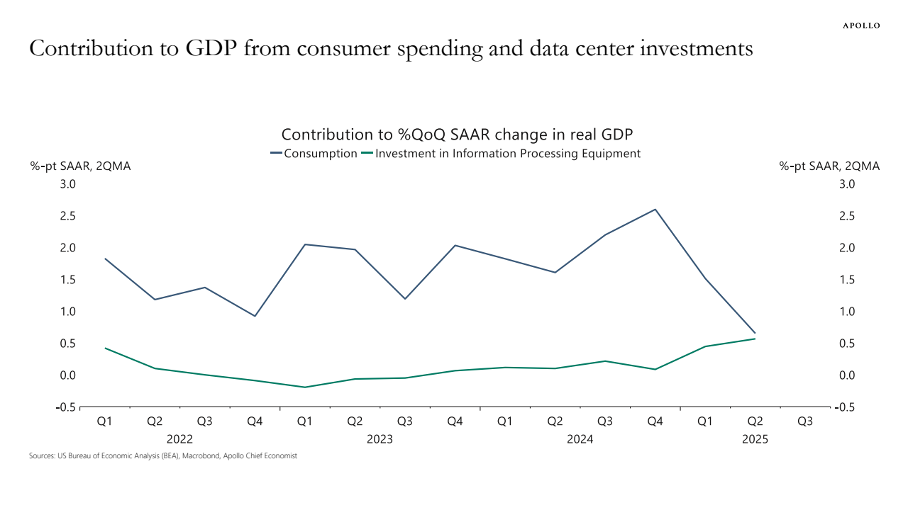

The surge in corporate investment in “information processing equipment” has been immense. According to Torsten Sløk, chief economist at Apollo Global Management, data center investment contributed as much to (sluggish) real U.S. GDP growth as consumer spending did during the first half of 2025 (Figure 2). Financial investor Paul Kedrosky notes that capital expenditures on AI data centers in 2025 have surpassed those of the telecom boom during the dot-com bubble (1995-2000).

FIGURE 2

Source: Torsten Sløk (2025). https://www.apolloacademy.com/…

On the back of the AI hype, tech stocks have posted significant gains. The S&P 500 index rose by roughly 58% over 2023 and 2024, propelled primarily by the soaring stock prices of the Magnificent 7. The average share price of these companies surged by 156% over the same period, while the remaining 493 firms averaged an increase of only 25%. The U.S. stock market’s recent exuberance has thus been driven largely by AI.
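A rough back-of-the-envelope check shows how concentrated these gains are. The sketch below is illustrative only and rests on a strong assumption not stated in the text: that the 156% and 25% figures can be treated as weighted-average returns that combine linearly into the 58% index return. The function names are hypothetical.

```python
def implied_weight(index_return, group_return, rest_return):
    """Solve index = w * group + (1 - w) * rest for the group's weight w."""
    return (index_return - rest_return) / (group_return - rest_return)

def contribution_share(index_return, group_return, rest_return):
    """Share of the index return attributable to the group under that weight."""
    w = implied_weight(index_return, group_return, rest_return)
    return w * group_return / index_return

# Figures quoted in the text: index +58%, Magnificent 7 +156%, other 493 firms +25%
w = implied_weight(0.58, 1.56, 0.25)
share = contribution_share(0.58, 1.56, 0.25)
print(f"implied Magnificent 7 weight: {w:.1%}")
print(f"share of index gain from Magnificent 7: {share:.1%}")
```

Under this assumption, the seven firms carry an implied weight of about a quarter of the index and account for roughly two-thirds of its gain. If the quoted averages are simple rather than weighted averages, the exact numbers would differ.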

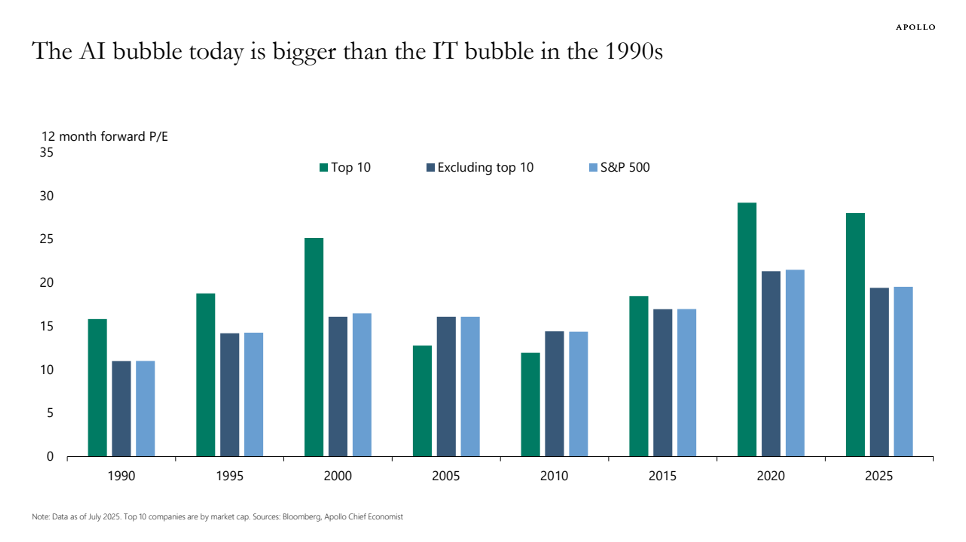

Nvidia’s shares have surged by more than 280% over the past two years on skyrocketing demand for its GPUs from AI developers. As one of the most prominent beneficiaries of that demand, Nvidia now has a market capitalization exceeding $4 trillion, the highest valuation ever recorded for a publicly traded company. But is this valuation justified? Nvidia’s price-earnings (P/E) ratio peaked at 234 in July 2023 and had declined to 47.6 by September 2025, a figure that is still historically elevated (see Figure 3). Nvidia is selling its GPUs to various neocloud companies (such as CoreWeave, Lambda, and Nebius), whose purchases are financed by loans from Goldman Sachs, JPMorgan, Blackstone, and other Wall Street firms and secured by data centers filled with GPUs. In some key cases, as Ed Zitron explains, Nvidia has offered to buy billions of dollars’ worth of unsold cloud computing capacity, effectively backstopping its clients, all on the premise of an AI revolution that has yet to materialize.

Similarly, Oracle Corp., which is not included in the “Magnificent 7,” saw its stock price rise more than 130% between mid-May and early September 2025 after announcing a $300 billion cloud-computing infrastructure deal with OpenAI. Oracle’s P/E ratio climbed to nearly 68, suggesting investors are willing to pay almost $68 for every dollar of Oracle’s projected earnings. A significant concern arises from this deal, as OpenAI lacks the funds for the promised $300 billion; the company incurred losses of $15 billion from 2023 to 2025 and anticipates a further cumulative loss of $28 billion from 2026 to 2028 (see below). It remains unclear how OpenAI intends to meet its financial obligations. Oracle faces the risk of possessing costly AI infrastructure without alternative clients should OpenAI fail to fulfill its contractual commitments, especially if the AI hype subsides.
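To put these P/E ratios in perspective, a P/E ratio can be inverted into an earnings yield: the earnings an investor gets per dollar paid for the stock. The sketch below uses only the ratios quoted in the text (Oracle's "nearly 68" is rounded to 68), and the function name is a hypothetical helper. It ignores earnings growth, which is precisely what investors paying these multiples are betting on.

```python
def earnings_yield(pe_ratio):
    """Earnings per dollar of share price: the inverse of the P/E ratio."""
    return 1.0 / pe_ratio

# P/E ratios quoted in the text
pe_ratios = {
    "Nvidia, July 2023 peak": 234.0,
    "Nvidia, September 2025": 47.6,
    "Oracle, September 2025 (approx.)": 68.0,
}

yields = {label: earnings_yield(pe) for label, pe in pe_ratios.items()}
for label, y in yields.items():
    print(f"{label}: P/E {pe_ratios[label]:g} -> earnings yield {y:.2%}")
```

At a P/E of 68, each dollar invested buys about 1.5 cents of annual earnings. At Nvidia's 47.6 it buys about 2.1 cents, and at the 2023 peak of 234 it bought less than half a cent.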

It is evident that tech stocks are overvalued. Torsten Sløk, chief economist at Apollo Global Management, warned in July 2025 that AI stocks may be even more overvalued than dot-com stocks were in 1999. In a recent article, he shows that the P/E ratios of Nvidia, Microsoft, and eight other tech firms exceed those of the dot-com era (see Figure 3). Many remember how the dot-com bubble ended, so Sløk’s warnings about the current market frenzy, driven by the “Magnificent 7” and their heavy investments in AI, warrant attention.

However, Big Tech does not operate data centers directly; they are constructed by contractors and then sold to operators who lease them to companies like OpenAI, Meta, or Amazon (see here). Wall Street private equity firms such as Blackstone and KKR invested billions to acquire these operators, through funding based on commercial mortgage-backed securities. Data center real estate has become a new, hyped asset class dominating financial portfolios. Blackstone has termed data centers one of its “highest conviction investments.” The lease contracts with AAA-rated clients such as AWS, Microsoft, and Google appeal to Wall Street investors due to their long-term, stable income. Some analysts caution about potential oversupply in data centers, yet proponents insist that “the future will be GenAI”—suggesting a blind faith in continued growth.

FIGURE 3

Source: Torsten Sløk (2025), https://www.apolloacademy.com/…

The pressing question remains: How long will investors continue to support the inflated valuations of key players in the GenAI race? Despite ongoing spending of tens of billions of dollars on data center expansion, the earnings generated by the AI industry still lag far behind. According to an optimistic S&P Global research report released in June 2025, the GenAI market is projected to reach $85 billion in revenue by 2029. Yet Alphabet (Google), Amazon, and Meta are together expected to spend nearly $400 billion on capital expenditures in 2025 alone. Meanwhile, the combined revenue of the AI sector barely exceeds that of the smart-watch market (Zitron 2025).
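The mismatch between spending and revenue is easy to quantify from the figures cited in this article. The sketch below is simple arithmetic, not a forecast. The variable names are hypothetical, and it compares numbers that cover different sets of firms and different years: the $3 trillion is the planned 2026-2029 data center spending mentioned earlier, and the $85 billion is projected market-wide GenAI revenue for 2029.

```python
# All amounts in billions of U.S. dollars, as quoted in the article
genai_revenue_2029 = 85.0       # S&P Global projection for the GenAI market
capex_2025 = 400.0              # expected 2025 capital expenditures of the named firms
planned_capex_2026_2029 = 3000.0  # planned data center spending, 2026 to 2029

# How many dollars are spent per dollar of projected 2029 revenue
ratio_2025 = capex_2025 / genai_revenue_2029
ratio_planned = planned_capex_2026_2029 / genai_revenue_2029

print(f"2025 capex per dollar of projected 2029 revenue: {ratio_2025:.1f}")
print(f"2026-2029 planned capex per dollar of projected 2029 revenue: {ratio_planned:.1f}")
```

On these figures, a single year of capital spending is nearly five times the revenue projected for 2029, and planned spending through 2029 is about 35 times that revenue.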

What if GenAI just isn’t profitable? This question weighs heavily given the diminishing returns on the soaring investments directed toward GenAI and data centers, compounded by the disappointing results from the majority of firms that have adopted AI. One of the largest hedge funds in Florida, Elliott, informed clients that the AI landscape is overhyped and that Nvidia is in a bubble, emphasizing that numerous AI products “will never be cost-efficient, will not function reliably, will incur excessive energy costs, or will prove untrustworthy.” “Real applications are limited,” it noted, mainly revolving around “summarizing meeting notes, generating reports, and aiding in coding.” Elliott also expressed skepticism regarding Big Tech firms maintaining their substantial GPU purchases.

Locking billions into AI-specific data centers without clear exit strategies creates systemic financial risks for the economy. With data center investments fueling U.S. economic growth, the country has become overly reliant on a handful of corporations that have yet to earn a dollar of profit on the ‘compute’ these investments provide.

America’s High-Stakes Geopolitical Gamble

The current AI boom (or bubble) has emerged with bipartisan support in the U.S. The prevailing vision is that American companies must lead the global AI race, and in particular the race to AGI. This ambition is framed as a contest with potentially adversarial nation-states, including China: if the U.S. secures AGI before anyone else, it could gain a formidable geopolitical advantage, particularly over China (see Farrell).

This is why Silicon Valley, Wall Street, and the Trump administration are intensely committed to the “AGI First” strategy. But astute observers worry about its costs and risks. Notably, Eric Schmidt and Selina Xu warned in the New York Times that “Silicon Valley has become so fixated on achieving this goal [AGI] that it is alienating the general public and, worse, bypassing opportunities to leverage existing technology. By prioritizing this aim alone, our nation risks falling behind China, which is more focused on implementing current technology rather than aspiring to create superior AI.”

Schmidt and Xu’s concerns are warranted. OpenAI leader Sam Altman’s vision of placing data centers in space (“Perhaps we could construct a large Dyson sphere around the solar system, as it would make more sense than positioning these on Earth”) paints a vivid picture of misplaced priorities. As long as such dreams continue to captivate naive investors, the government, and the public with the allure of AI’s capabilities, America’s economic fate hangs in the balance.