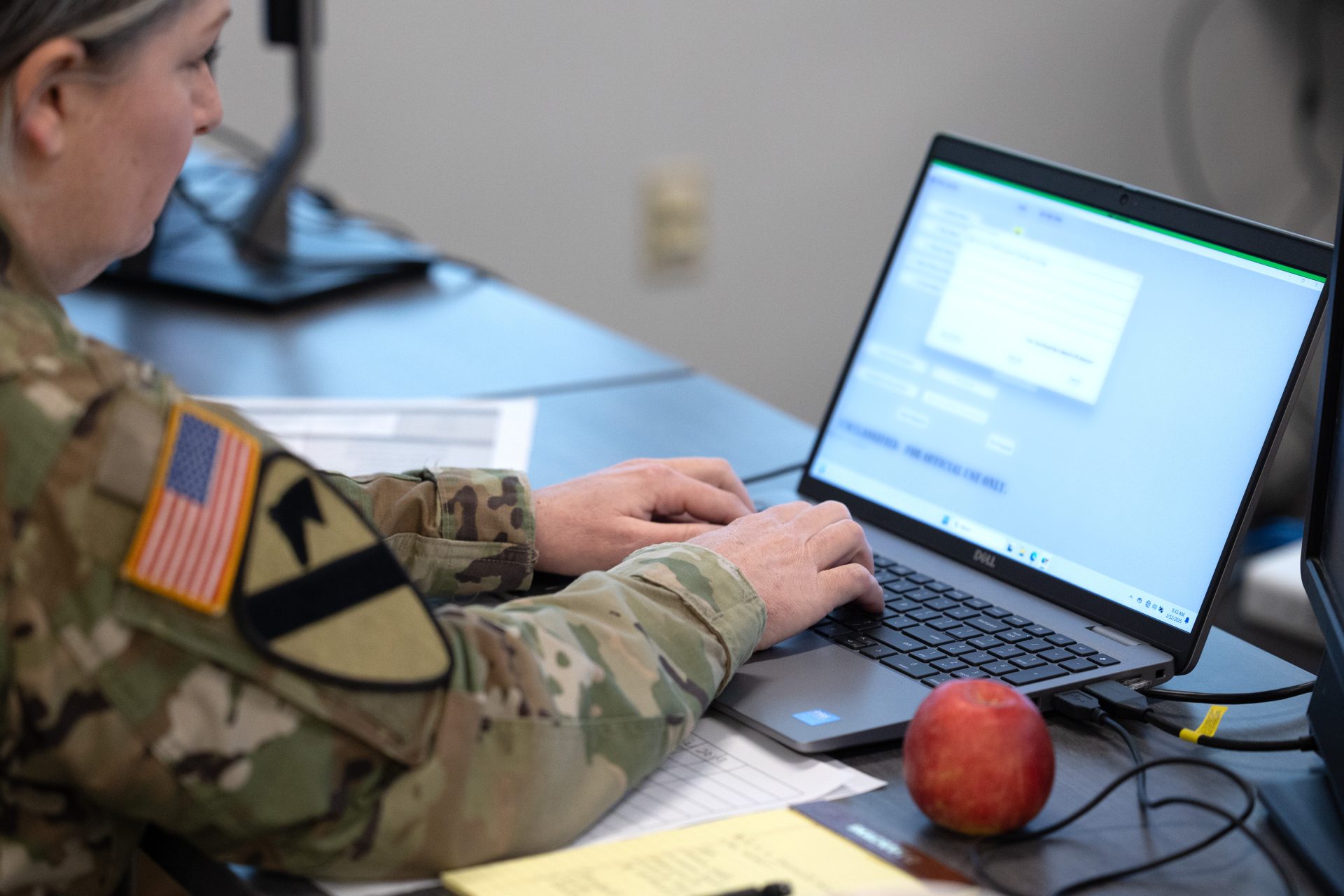

Recently, an intriguing message began to circulate among Army personnel, introducing a promising artificial intelligence tool named VECTOR. This application was said to have the potential to transform how soldiers manage their talents.

VECTOR was hosted on an official Army data analytics platform, with claims that it could assist soldiers in drafting performance evaluations and preparing for promotion boards. By leveraging historical board data, the tool aimed to enhance critical assessments that determine a soldier’s advancement to the next rank.

However, shortly after its introduction, VECTOR was taken down, at least temporarily.

According to a defense spokesperson, the program was not officially authorized by the Army, but rather developed by a non-commissioned officer. Army spokesperson Cynthia Smith confirmed to DefenseScoop that VECTOR’s suspension was a result of an ongoing “compliance review.” She noted that the creator intended for the tool to provide a better understanding of the personnel process but clarified that it did not access any “historic, sensitive data.” When questioned about the removal, Smith reiterated that the program was under review, though she did not comment on the precise details of its widespread dissemination.

The brief existence of VECTOR raises essential questions about the intersection of the military’s swift AI integration and the necessary policy guidelines, according to a military innovation analyst speaking to DefenseScoop. While military and tech leaders have promoted generative AI as a transformative technology on the battlefield, experts have voiced concerns regarding privacy, ethical considerations, and the stability of institutional trust.

The Pentagon is eager for troops to integrate large language models into their daily tasks as part of an “acceleration strategy” aimed at “unleashing experimentation,” as stated by Defense Secretary Pete Hegseth. However, policies must also be in place to keep unauthorized AI tools from entering the force, where they could carry significant consequences, said Carlton Haelig of the Center for a New American Security’s Defense Program.

“There is a concerted push from both civilian and military leaders to adopt AI across various applications, which is positive for pursuing efficiencies and enhancing capabilities,” Haelig noted. “Yet, the urgency surrounding the adoption of new AI tools may lead to insufficient procedures and regulations, diminishing the quality of necessary oversight.”

VECTOR was created on Army Vantage, a platform developed by Palantir that aims to improve decision-making through the integration of data repositories and machine learning. To access Vantage, military staff and department civilians use a Common Access Card and complete cyber awareness training, after which they can develop their own applications.

The tool was marketed as a means for troops to draft officer and non-commissioned officer evaluation reports. These assessments, submitted by superiors, are vital for determining a soldier’s performance and eligibility for promotion.

Haelig noted that the relatively low-stakes environment surrounding VECTOR indicates a “healthy ecosystem,” particularly as it pertained to administrative tasks. Yet, this situation underscores the critical challenge of regulating unauthorized AI tools in high-stakes scenarios, such as combat, while also encouraging innovative experimentation.

“The goal is to foster individual initiative for AI adoption within the force, allowing servicemen and women to develop innovative and beneficial applications,” he explained. “However, the lack of clear regulations can lead to hasty rollouts of tools that may not meet necessary standards.”

Just a week after the speedy launch of GenAI.mil in December, DefenseScoop reported mixed feedback on the Pentagon’s new hub for commercial AI platforms, partly due to the absence of clear usage guidelines. Initially, the platform featured Google Gemini, with other models from OpenAI, Anthropic, and xAI expected to join. Additionally, the Defense Department recently released an AI strategy advocating for prompt and aggressive technology adoption.

The VECTOR message circulated on social media, detailing its capability to generate soldier profiles based on rank, military occupational specialty, position, and unspecified “board data.” It claimed to be trained on numerous talent management regulations, including OER and NCOER report writing policies and professional development guidelines.

By analyzing historical promotion board data and scoring criteria, the message suggested VECTOR could give soldiers insight into how they compared with peers during these assessments, which are conducted by senior Army leaders.

It emphasized compliance with regulations, protection of personally identifiable information, session isolation, and anonymized, aggregated data for board analysis.

Smith, the Army spokesperson, denied that VECTOR accessed any historical or sensitive information.

“If it truly did not have access to historical data, as the Army asserts, then it was likely more of a developmental effort that was not yet ready for implementation,” Haelig commented. “However, if it had accessed historical data, it begs the question: how did it obtain that data without necessary approvals?”

Although VECTOR is currently suspended, its concept holds relevance. An Army first sergeant, responsible for evaluating a number of non-commissioned officers, remarked that it “would be naive to assume that NCOs and officers are not already utilizing AI to assist in writing their evaluations.”

Justin Lynch, a nonresident fellow at the Atlantic Council, stated that a well-designed program could streamline report writing, benefiting those who are not strong writers. “While there are risks involved, an AI tool that supports writers could help mitigate bias in evaluations,” he explained, while stressing the importance of thorough validation and verification processes to ensure alignment with Army standards.

“Security must be prioritized on two fronts,” Lynch emphasized. “First, we must ensure it does not introduce vulnerabilities into Army systems, and second, it should not expose any personally identifiable data from underlying databases.”

“It’s essential to establish adequate policies and safeguards without being overly restrictive, as excessive regulations could discourage innovation,” he added.

Given that talent management is a crucial Army mission aimed at evaluating and retaining quality personnel, it should fundamentally revolve around human-to-human development.

“We must continue to develop our subordinates and mentor our NCOs; leadership is paramount,” the first sergeant asserted. “While such a tool can be beneficial, it must not replace our obligation to cultivate future leaders.”

He indicated that the Army already publishes promotion board guidelines, which provide soldiers with essential information, but a responsible AI tool could serve as a helpful cross-referencing resource.

“In any AI-enhanced process, a human presence is vital,” he concluded. “We need to ensure thorough checks in the evaluation process, reinforcing the necessity for leaders to guide and develop individuals. Solely depending on AI for leadership development is not an option.”