Examining the Economics of AI: The Alarming Findings of Ed Zitron

Ed Zitron has been digging into the contested financial landscape of artificial intelligence (AI), and his latest article, Exclusive: Here’s How Much OpenAI Spends On Inference and Its Revenue Share With Microsoft, reports a striking discovery. If Zitron’s findings hold, they suggest that large language models like ChatGPT may be much further from economic viability than is widely assumed. This could explain why OpenAI’s CEO, Sam Altman, has been hinting at the need for a bailout.

Background on AI Economics

Through a series of extensively researched articles, Zitron has illustrated a significant disparity between the massive capital expenditures of major AI players and their relatively modest revenues, not to mention profits. His insights into the considerable cash burn and probable capital misallocation in AI echo the critical work of Gary Marcus, who has also highlighted fundamental performance limitations in this field. Here’s a selection of Marcus’ sobering observations:

- 5 recent, ominous signs for Generative AI

- Five signs that Generative AI is losing traction

- Could China devastate the US without firing a shot?

For a succinct overview of the unsustainable economic situation surrounding OpenAI, see the opening passage from Marcus’ article, OpenAI probably can’t make ends meet. A recent exchange highlighted this concern when Sam Altman expressed frustration with investor Brad Gerstner, who questioned how OpenAI planned to cover $1.4 trillion in obligations against only $13 billion in revenue.
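To put Gerstner’s question in proportion, the sketch below divides the two figures he cited. It is a back-of-the-envelope calculation under a deliberately generous assumption: revenue stays flat at $13 billion and every dollar of it goes toward the $1.4 trillion, with no operating costs at all. The constant and function names are illustrative and are not drawn from any OpenAI filing.

```python
# Back-of-the-envelope ratio of OpenAI's cited obligations to its cited revenue.
OBLIGATIONS_USD = 1.4e12   # $1.4 trillion in obligations, per Gerstner's question
ANNUAL_REVENUE_USD = 13e9  # $13 billion in revenue, per the same exchange


def years_of_revenue(obligations: float, annual_revenue: float) -> float:
    """Years of flat revenue needed to equal the obligations, ignoring all costs."""
    return obligations / annual_revenue


ratio = years_of_revenue(OBLIGATIONS_USD, ANNUAL_REVENUE_USD)
print(f"Obligations equal about {ratio:.0f} years of current revenue")
```

Even under that unrealistic assumption, covering the obligations would take roughly a century; real operating costs would only lengthen that horizon.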

For context, the subprime mortgage market was commonly estimated at around $1.3 trillion. Even during the financial crisis, AAA-rated tranches of mortgage-backed securities mostly retained some value, because the underlying properties could be liquidated. This brings us back to Zitron’s latest revelations.

The Misguided Hope in AI Economics

Many AI proponents in the business media maintain that even if the AI giants falter financially, they will still leave behind substantial assets, much as the railroad boom and the dot-com bubble did. This view, however, often stems from a naive understanding of AI economics. Railroads had hefty fixed costs but minimal variable ones; AI carries two large cost streams:

- Substantial training costs

- Significant ongoing “inference” costs

Inference costs often exceed training costs, which poses a fundamental challenge to the economic sustainability of AI. Zitron suggests that OpenAI’s inference costs may be higher than the company has publicly disclosed, and that products like ChatGPT may be priced well below what they cost to serve.
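The contrast between the railroad model and the AI model can be sketched with a simple average-cost formula: fixed costs spread across every unit served, plus a variable cost per unit. The figures below are hypothetical, chosen only to show the shape of the curves; the "unit" could be a ton of freight or a chatbot query. When the variable cost is near zero, average cost keeps falling with scale. When it is large, as inference costs are claimed to be, average cost levels off at that variable cost no matter how much usage grows.

```python
def average_cost_per_unit(fixed_cost: float, variable_cost: float, units: int) -> float:
    """Average cost per unit: fixed cost spread over all units, plus the per-unit variable cost."""
    return fixed_cost / units + variable_cost


# Hypothetical cost structures: identical fixed costs, very different variable costs.
RAILROAD_LIKE = {"fixed_cost": 1_000_000.0, "variable_cost": 0.01}
AI_LIKE = {"fixed_cost": 1_000_000.0, "variable_cost": 0.50}

for units in (10_000, 1_000_000, 100_000_000):
    rail = average_cost_per_unit(units=units, **RAILROAD_LIKE)
    ai = average_cost_per_unit(units=units, **AI_LIKE)
    print(f"{units:>11,} units: railroad-like ${rail:.2f}/unit, AI-like ${ai:.2f}/unit")
```

If the price charged per unit sits below that floor, as Zitron suggests may be the case for ChatGPT, each additional unit of usage adds to the loss rather than diluting it.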

Understanding Inference Costs

To explain inference costs, consider a late-2024 piece from the Primitiva Substack titled All You Need to Know about Inference Cost:

“In the 16 months following the launch of GPT-3.5, the focus was predominantly on training costs, which were staggering. However, after API price reductions in mid-2024, inference costs have come to the forefront, revealing that inference is even more expensive than training.”

According to Barclays, training the GPT-4 series required around $150 million in compute, while GPT-4’s cumulative inference costs were projected to reach $2.3 billion by the end of 2024, about 15 times the training cost. Gary Marcus also noted in October that GPT-5, anticipated for release in 2024, had not materialized on schedule, and that its performance has been lacking.
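The "15 times" multiple follows directly from Barclays’ two estimates, as a quick check shows. The variable names are illustrative; the figures are the ones cited above.

```python
TRAINING_COST_USD = 150e6         # Barclays: GPT-4 series training compute
CUMULATIVE_INFERENCE_USD = 2.3e9  # Barclays: projected GPT-4 inference through end of 2024

# Ratio of cumulative inference spending to one-time training spending.
multiple = CUMULATIVE_INFERENCE_USD / TRAINING_COST_USD
print(f"Inference is about {multiple:.1f}x the training cost")
```

The exact ratio is about 15.3, which rounds to the 15 times cited.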

Primitiva notes that GPT-o1, released in September 2024, pushed compute demands higher still: it generates 50% more tokens per prompt than GPT-4o, and its inference tokens are produced at four times GPT-4o’s output rate, significantly increasing overall costs.

Cost and Capability Trade-offs

Tokens, the units of text (words or word fragments) that language models read and generate, drive inference compute. For every token processed, each model parameter takes part in roughly two floating-point operations, a multiply and an add, so inference compute can be approximated as:

Total FLOPs ≈ 2 × Number of Tokens × Number of Model Parameters
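The approximation translates directly into code. This is a sketch of the common rule of thumb for a dense transformer’s forward pass (about two FLOPs per parameter per token). It ignores the attention term that grows with context length, and the 70-billion-parameter model in the example is hypothetical rather than any specific OpenAI model.

```python
def inference_flops(num_tokens: int, num_params: float) -> float:
    """Approximate forward-pass compute: ~2 FLOPs per parameter for each token processed.

    Ignores the attention cost that grows with context length.
    """
    return 2 * num_tokens * num_params


# Hypothetical example: a 70-billion-parameter model generating a 1,000-token answer.
flops = inference_flops(num_tokens=1_000, num_params=70e9)
print(f"{flops:.2e} FLOPs")  # 1.40e+14 FLOPs

# Compute scales linearly with tokens: five times the tokens, five times the FLOPs.
print(inference_flops(5_000, 70e9) / flops)  # 5.0
```

Because compute grows in proportion to tokens, a model that emits more tokens per answer costs proportionally more to run, which is why the token volume of reasoning models matters so much.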

This volume expansion, combined with a sixfold higher per-token price for GPT-o1 than for GPT-4o, produces a roughly 30-fold rise in API costs for similar tasks. Research from Arizona State University indicates that in practical applications the increase could reach as much as 70-fold.
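Since API cost is tokens used times price per token, the cost multipliers above can be decomposed. The sketch below assumes nothing else differs between the two models (no other fees) and that the sixfold price ratio also applies to the Arizona State upper bound, which the source does not state. Under those assumptions, the 30-fold figure implies roughly five times as many tokens per task.

```python
PRICE_MULTIPLIER = 6.0  # GPT-o1 vs GPT-4o price per token, as cited above


def relative_api_cost(token_multiplier: float, price_multiplier: float) -> float:
    """Cost relative to a baseline model: (relative tokens used) x (relative price per token)."""
    return token_multiplier * price_multiplier


def implied_token_multiplier(cost_multiplier: float, price_multiplier: float) -> float:
    """Invert the cost relation to recover how many times more tokens a task consumes."""
    return cost_multiplier / price_multiplier


# Assumption: the 6x price ratio applies to both the typical and the upper-bound case.
for label, cost in (("typical task", 30.0), ("ASU upper bound", 70.0)):
    tokens = implied_token_multiplier(cost, PRICE_MULTIPLIER)
    print(f"{label}: {cost:.0f}x cost -> about {tokens:.1f}x tokens")
```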

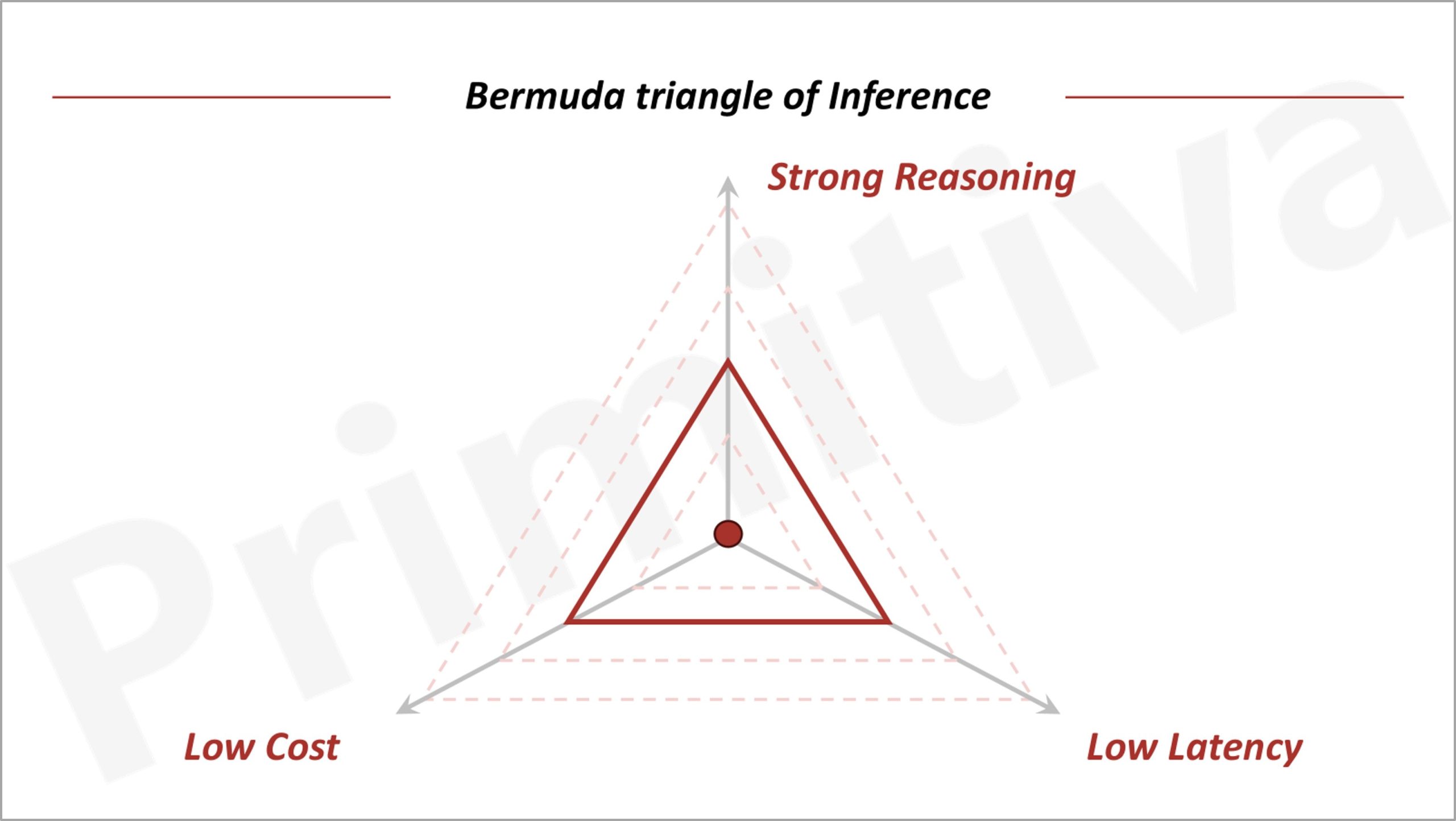

Unsurprisingly, access to GPT-o1 has been restricted to paid subscribers, with usage caps. The steep rise in costs underscores the tension between compute expenses, model capabilities, and user experience. This trade-off has been dubbed the “Bermuda Triangle of Generative AI”: improving one corner usually means sacrificing another.

The Implications of Zitron’s Findings

Zitron is cautiously confident in the accuracy of his findings, and brief exchanges with OpenAI may offer additional clarity. He points to concerning trends in OpenAI’s financials, particularly the inference costs tied to its partnership with Microsoft. Zitron says he has access to documents indicating that OpenAI’s inference spending on Microsoft Azure reached $5.02 billion in the first half of 2025, significantly higher than previously reported figures.

According to those documents, OpenAI’s inference spending climbed steadily over the past 18 months, reaching $8.67 billion by September 2025. Set against OpenAI’s reported revenue, Zitron argues, inference spending consistently exceeds what the company brings in.
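The two inference figures can be turned into monthly run rates. This sketch assumes, as the text does not state outright, that the $8.67 billion is cumulative spending for January through September 2025, so subtracting the $5.02 billion first-half figure leaves third-quarter spending. It then annualizes the third-quarter pace and sets it beside the $13 billion revenue figure from Gerstner’s question; both the assumption and the comparison are illustrative.

```python
H1_2025_INFERENCE = 5.02e9           # Jan-Jun 2025 inference on Azure, per Zitron's documents
THROUGH_SEP_2025_INFERENCE = 8.67e9  # assumption: cumulative Jan-Sep 2025
ANNUAL_REVENUE = 13e9                # revenue figure from Gerstner's question

q3_spend = THROUGH_SEP_2025_INFERENCE - H1_2025_INFERENCE  # implied Jul-Sep 2025 spending
h1_monthly = H1_2025_INFERENCE / 6
q3_monthly = q3_spend / 3
q3_annualized = q3_spend * 4  # if the Q3 pace held for a full year

print(f"H1 monthly: ${h1_monthly / 1e9:.2f}B, Q3 monthly: ${q3_monthly / 1e9:.2f}B")
print(f"Q3 pace annualized: ${q3_annualized / 1e9:.1f}B vs ${ANNUAL_REVENUE / 1e9:.0f}B revenue")
```

On that reading, monthly inference spending rose by roughly 45% between the first half and the third quarter, and the annualized pace would exceed the revenue figure on inference alone.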

Reconciling Revenue Streams

The documents Zitron reviewed also cast doubt on OpenAI’s reported revenue. Microsoft’s 2024 revenue share from OpenAI was notably smaller than the reported revenue figures would imply, raising questions about the transparency of OpenAI’s financial reporting. Altman’s claims of revenues significantly exceeding $13 billion appear increasingly at odds with the evidence at hand.
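The reasoning behind that doubt can be sketched: if Microsoft receives a fixed percentage of OpenAI’s revenue, its payments imply a revenue figure, and a smaller-than-expected payment implies smaller revenue. The 20% rate below is a widely reported figure that this article does not state, so treat it as an assumption. The payment amount is hypothetical, and the real agreement may define the revenue base differently.

```python
def implied_revenue(share_payment: float, share_rate: float) -> float:
    """Back out total revenue from a revenue-share payment, where payment = rate * revenue."""
    if not 0 < share_rate <= 1:
        raise ValueError("share_rate must be in the interval (0, 1]")
    return share_payment / share_rate


ASSUMED_SHARE_RATE = 0.20     # assumption: not stated in the article
hypothetical_payment = 1.0e9  # hypothetical payment, for illustration only

print(f"Implied revenue: ${implied_revenue(hypothetical_payment, ASSUMED_SHARE_RATE) / 1e9:.1f}B")
```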

Given the sensitive nature of these findings, Zitron adopts a more direct approach. The potential disparity between OpenAI’s reported costs and revenues raises serious concerns about its business model and operational viability.

Conclusion

Transparency is essential to understanding the financial landscape of AI. Zitron’s insights challenge existing narratives about the industry’s sustainability and highlight the necessity of clearer communication from companies like OpenAI and Microsoft regarding their operational costs and revenue streams. As the AI landscape continues to evolve, these revelations could have far-reaching implications for stakeholders and the future of AI technology.