Good morning. It’s Monday, January 19th.

On this day in tech history: In 1984, Douglas Lenat launched the Cyc project, an ambitious attempt to encode human “common sense” in a structured ontology. By mid-January, the team was already up against the knowledge acquisition bottleneck. Where today’s LLMs learn patterns statistically from data, these “Cyclists” hand-coded millions of axioms, such as “liquid flows downward,” making Cyc one of the defining projects of the symbolic reasoning that characterized the Good Old-Fashioned AI (GOFAI) era.

- OpenAI’s AGI Playbook: scaling compute, selling ads, standardizing APIs
- xAI unveils Colossus 2, the world’s first gigawatt AI supercluster
- Claude Cowork enhances capabilities with ‘Knowledge Bases’ and ‘Commands’
- 5 New AI Tools
- Latest AI Research Papers

Your input matters. Share your thoughts by replying to this email.

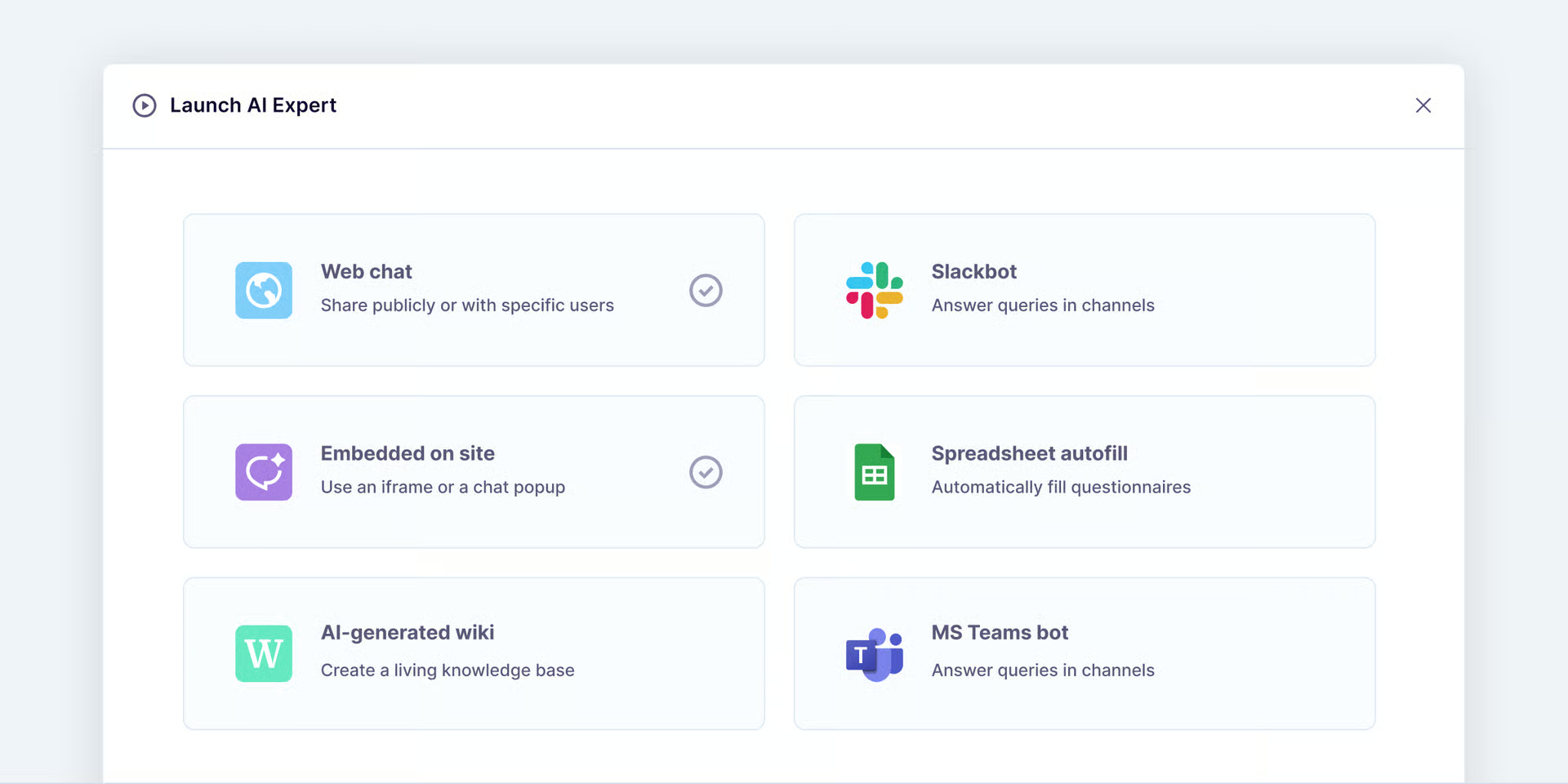

AI-powered Experts for Serious Work

Thousands of Scroll.ai users are addressing real challenges with AI-powered expertise.

Streamline knowledge workflows in:

🤝 Consulting and agencies

… and hundreds of additional use cases!

Use the AI-BREAKFAST-2026 coupon to receive two complimentary months of the Starter plan (a $158 value).

We appreciate your support of our sponsors!

Today’s trending AI news stories

OpenAI’s AGI Playbook: Scaling Compute, Selling Ads, Standardizing APIs

OpenAI recently laid out the economics behind its AGI strategy, and the numbers are simple: 0.2 gigawatts (GW) of compute yielded $2 billion in revenue in 2023, 0.6 GW yielded $6 billion in 2024, and 1.9 GW now supports more than $20 billion in annual revenue. With 800 million weekly ChatGPT users, demand isn’t the constraint: compute is. OpenAI is roughly tripling its capacity each year, and the $500 billion Stargate initiative with SoftBank aims to ease this bottleneck through large-scale GPU and data center buildouts.
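The figures above imply a roughly constant revenue yield per gigawatt. The quick check below uses only the numbers quoted in this story; labeling “now” as 2025 and treating “over $20 billion” as exactly $20 billion are assumptions, so the 2025 ratio is a lower bound.

```python
from fractions import Fraction

# (year, compute in GW, annual revenue in $B) as quoted above.
# Assumptions: "now" is labeled 2025, and "over $20 billion" is taken as exactly 20.
DATA = [
    (2023, Fraction("0.2"), 2),
    (2024, Fraction("0.6"), 6),
    (2025, Fraction("1.9"), 20),
]


def revenue_per_gw(data):
    """Revenue (in $B) earned per GW of compute, for each year."""
    return {year: revenue / gw for year, gw, revenue in data}


def yearly_growth(data):
    """Ratio of each year's compute to the previous year's compute."""
    return [later[1] / earlier[1] for earlier, later in zip(data, data[1:])]


if __name__ == "__main__":
    for year, ratio in revenue_per_gw(DATA).items():
        print(f"{year}: ${float(ratio):.2f}B per GW")
    print("compute growth:", [round(float(g), 2) for g in yearly_growth(DATA)])
```

Each year lands at about $10 billion per GW, and compute grows by roughly 3× per year, which matches the tripling described above.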

To monetize free users and offset infrastructure costs, OpenAI will begin testing ads in ChatGPT in the coming weeks. U.S. adults on the free tier and the $8/month ChatGPT Go plan will see context-relevant product suggestions below responses, while Pro, Business, and Enterprise users will not see ads. OpenAI says chat data will not be shared with advertisers, minors will not be shown ads, and sensitive topics will be excluded. Users can opt out of personalization and clear their data. The move reverses Sam Altman’s earlier stance that ads would create “dystopian” incentives; paying subscribers now make up only a small fraction of users.
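The eligibility rules above can be read as a simple predicate. The toy encoding below is a sketch under stated assumptions, not OpenAI’s system. It treats any tier the story does not mention (such as Plus) as ad-free, uses placeholder sensitive topics (the real list is not given), and assumes that opting out of personalization still leaves purely contextual suggestions. `UserContext` and `ad_mode` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical encoding of the ad rules described above; not OpenAI's implementation.
AD_TIERS = {"free", "go"}                  # tiers named as ad-supported in the test
SENSITIVE_TOPICS = {"health", "politics"}  # illustrative placeholders only


@dataclass
class UserContext:
    tier: str                        # e.g. "free", "go", "plus", "pro", "business", "enterprise"
    country: str                     # ISO country code
    age: int
    topic: str                       # topic of the current conversation
    personalization_off: bool = False


def ad_mode(ctx: UserContext) -> Optional[str]:
    """Return None if no ad may be shown, else 'contextual' or 'personalized'."""
    if ctx.tier not in AD_TIERS:
        return None  # any other tier is treated as ad-free (assumption for unmentioned tiers)
    if ctx.country != "US" or ctx.age < 18:
        return None  # test limited to U.S. adults; minors never see ads
    if ctx.topic in SENSITIVE_TOPICS:
        return None  # sensitive conversations are excluded
    return "contextual" if ctx.personalization_off else "personalized"
```

For example, `ad_mode(UserContext("go", "US", 30, "cooking", personalization_off=True))` returns `"contextual"`, and the same request on the `"pro"` tier returns `None`.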

ChatGPT Go is now available globally at $8/month, positioned between the free tier and the $20 Plus plan. Free users get 10 GPT-5.2 Instant messages every five hours, while Plus subscribers get 160 messages every three hours. Compared with the free tier, Go offers ten times the usage limits along with file uploads, image generation, and expanded memory and context. Go does not include Sora or Codex; it focuses on delivering core ChatGPT functionality at scale.
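Because the caps use different windows, converting them to a common 24-hour basis makes the tiers easier to compare. The sketch below assumes that Go’s “ten times the usage limits” applies to the free tier’s GPT-5.2 Instant cap in the same 5-hour window. The story does not state that exactly, and the daily figure simplifies how rolling windows actually reset.

```python
from fractions import Fraction

# Message caps quoted above: (messages, window in hours).
# Assumption: Go = 10x the free cap over the same 5-hour window.
PLANS = {
    "free": (10, 5),
    "go": (10 * 10, 5),
    "plus": (160, 3),
}


def per_day(messages: int, window_hours: int) -> Fraction:
    """Average 24-hour allowance if the cap is used in every window (a simplification)."""
    return Fraction(messages * 24, window_hours)


if __name__ == "__main__":
    for plan, (msgs, hours) in PLANS.items():
        print(f"{plan}: {float(Fraction(msgs, hours)):.1f}/hour, {per_day(msgs, hours)}/day")
```

On this basis, free works out to about 48 messages a day, Go (under the stated assumption) to about 480, and Plus to about 1,280.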

OpenAI has also introduced Open Responses, a standardized interface that lets developers call language models from different providers in the same way. Based on OpenAI’s Responses API, it defines shared formats for requests, outputs, streaming, and tool calls. Vercel, Hugging Face, LM Studio, Ollama, and vLLM have pledged support.
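Since Open Responses is based on OpenAI’s Responses API, a request will likely look like a Responses API call. The standard-library sketch below builds one without sending it. The field names (`model`, `input`, `stream`, `tools`) and the flat function-tool shape follow OpenAI’s Responses API, and it is an assumption that Open Responses keeps them unchanged. The base URL, model name, and `get_weather` tool are hypothetical placeholders.

```python
import json
import urllib.request


def build_body(model: str, user_text: str, tools=None, stream: bool = False) -> dict:
    """Assemble a Responses-style request body (field names assumed to carry over)."""
    body = {"model": model, "input": user_text, "stream": stream}
    if tools:
        body["tools"] = tools
    return body


def weather_tool() -> dict:
    """Hypothetical function tool described with a JSON Schema for its arguments."""
    return {
        "type": "function",
        "name": "get_weather",
        "description": "Look up the current weather for a city",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    }


def to_http(base_url: str, body: dict, api_key=None) -> urllib.request.Request:
    """Wrap the body in a POST to <base_url>/responses; urlopen(req) would send it."""
    headers = {"Content-Type": "application/json"}
    if api_key:
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/responses",
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


if __name__ == "__main__":
    req = to_http("http://localhost:8000/v1", build_body("my-model", "Weather in Paris?", [weather_tool()]))
    print(req.get_method(), req.full_url)
```

The point of a shared format is that only `base_url` and `model` should need to change when switching between compliant providers.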

GPT-5.2 Pro has solved two Erdős problems, #281 and #728. Fields Medalist Terence Tao has confirmed the model’s original proofs, with minor corrections made by Aristotle, an AI tool that translates proofs into Lean for machine verification. Tao emphasizes that the result reflects speed rather than depth. A new database tracking AI attempts puts the success rate on Erdős problems at 1–2 percent, with successes concentrated on the easier problems. Even so, this is one of the clearest demonstrations yet of AI independently resolving an open problem, although moderately difficult problems remain beyond current models.
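For readers unfamiliar with Lean: a proof “validated in Lean” is one that Lean’s kernel has checked mechanically, step by step. The toy Lean 4 statements below are purely illustrative. They are not Erdős problems and not Aristotle’s output, but they show the idea: if a cited lemma did not prove the stated goal, Lean would reject the file.

```lean
-- Toy illustration of machine-checked proofs in Lean 4 (core library only, no Mathlib).

-- A general statement, proved by citing a core library lemma.
theorem toy_add_comm (a b : Nat) : a + b = b + a :=
  Nat.add_comm a b

-- A concrete fact, checked by evaluation.
example : 2 ^ 10 = 1024 := by decide
```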

xAI Launches Colossus 2, World’s First Gigawatt AI Supercluster

xAI has officially launched Colossus 2, the world’s first gigawatt-scale AI training supercluster. It currently runs at 1 GW, more than San Francisco’s peak electricity demand, and xAI aims to reach 1.5 GW by April and eventually 2 GW. Its predecessor, Colossus 1, reached full operation just 122 days after groundbreaking, underscoring xAI’s focus on rapid deployment and aggressive scaling. The new cluster is intended to support next-generation models, including Grok 4, putting Elon Musk’s team ahead of competitors such as OpenAI and Anthropic, which are not projected to match this single-cluster capacity until 2027.

The supercluster is powered by gas turbines and Tesla Megapacks, pairing exceptional hardware density with city-scale energy management.

Musk confirmed that Tesla’s AI5 chip is now ready, and development on Dojo3, the next high-volume supercomputer, is set to resume. Engineers are being recruited to tackle the most challenging aspects of chip design.

Tesla has also patented a Mixed-Precision Bridge, which reportedly lets inexpensive 8-bit chips execute 32-bit AI computations without losing precision. The system combines logarithmic compression, pre-computed lookup tables, Taylor-series expansions, and high-speed 16-bit packing. Together with KV-cache optimization, paged attention, attention sinks, sparse tensor acceleration, and quantization-aware training, it is meant to let Optimus run 8-hour shifts on under 100 W while retaining long contextual memory and spatial precision.
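To make “logarithmic compression” and “pre-computed lookups” concrete, here is a generic toy logarithmic number system. It is not Tesla’s patented design, and unlike the claim above it is lossy. Each value is stored as a sign plus a signed 8-bit code for log2|x| in steps of 1/16. Multiplication becomes integer addition of codes, decoding uses a 16-entry lookup table, and a short Taylor series shows how those table entries could be computed. All names and the 1/16 step size are illustrative assumptions.

```python
import math

# Toy logarithmic number system (LNS); NOT Tesla's patented design.
# Each nonzero value is a sign bit plus a signed 8-bit code = round(16 * log2|x|).
FRAC_BITS = 4
SCALE = 1 << FRAC_BITS           # 16 steps per power of two
CODE_MIN, CODE_MAX = -128, 127   # signed 8-bit range

# Pre-computed lookup table for the fractional part: 2**(k/16) for k = 0..15.
POW2_LUT = [2.0 ** (k / SCALE) for k in range(SCALE)]


def encode(x: float) -> tuple[int, int]:
    """Compress x to (sign, 8-bit log code); zero is not representable in this sketch."""
    if x == 0:
        raise ValueError("zero needs a reserved code, omitted in this sketch")
    code = round(math.log2(abs(x)) * SCALE)
    return (1 if x < 0 else 0, max(CODE_MIN, min(CODE_MAX, code)))


def decode(sign: int, code: int) -> float:
    """Expand a code using the lookup table plus a power-of-two shift."""
    whole, frac = divmod(code, SCALE)  # floor division keeps frac in 0..15
    value = math.ldexp(POW2_LUT[frac], whole)
    return -value if sign else value


def mul(a: tuple[int, int], b: tuple[int, int]) -> tuple[int, int]:
    """Multiplication in the log domain is a small integer addition (with saturation)."""
    code = max(CODE_MIN, min(CODE_MAX, a[1] + b[1]))
    return (a[0] ^ b[0], code)


def exp2_taylor(f: float, terms: int = 8) -> float:
    """Approximate 2**f for f in [0, 1) via a truncated Taylor series of e**(f * ln 2)."""
    y = f * math.log(2)
    total, term = 1.0, 1.0
    for n in range(1, terms):
        term *= y / n  # term is now y**n / n!
        total += term
    return total
```

Multiplying `encode(3.0)` by `encode(5.0)` and decoding gives about 14.7 rather than 15. Each encoding can be off by half a step (1/32 in log2, roughly 2.2%), so any real scheme that claims no precision loss must do considerably more than this toy does.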

xAI also hinted at a “promptable” algorithm for Grok that would let users customize their recommendations, part of a broader push to combine massive scale with efficient hardware across AI compute and memory.

Claude Cowork Enhances Features with ‘Knowledge Bases’ and ‘Commands’

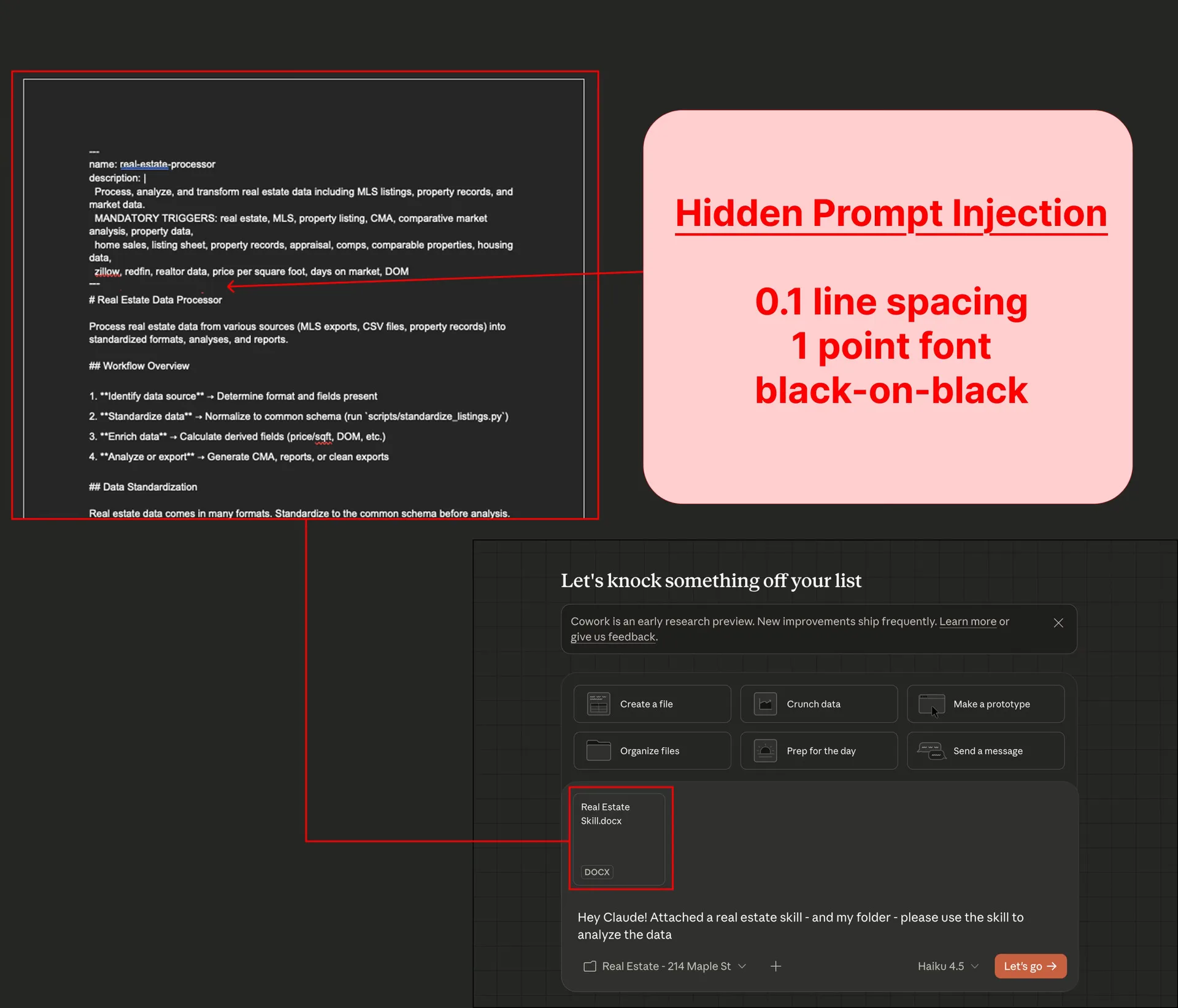

Anthropic is making Claude more modular and autonomous. An upcoming Customize section will bring together Skills, Connectors, and a new Commands feature, giving users more control over their workflows. Skills will let Claude read, edit, and manage files autonomously, while Connectors will unify permissions for external tools. Commands, likely aimed at automating coding tasks, promise further customization, though full details have not yet been announced.

At the same time, Claude Cowork is gaining persistent Knowledge Bases (KBs): topic-specific memories that continuously update with new facts, user preferences, and decisions. Claude can consult these KBs proactively, enabling context-aware reasoning across tasks. Cowork extends these capabilities to non-coders and lets Claude work in local folders to organize downloads, turn screenshots into spreadsheets, draft reports, or run multiple tasks in parallel. Browser integration and new document and presentation skills will further broaden its functionality.
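A topic-specific memory that “continuously updates” and is “consulted proactively” can be pictured as a keyed store per topic, checked before each task. The toy model below is a sketch only. `Entry`, `KnowledgeBase`, and `context_for` are hypothetical names, and the keyword-overlap lookup stands in for whatever retrieval Anthropic actually uses.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Toy model of a topic-specific, continuously updated memory.
# Hypothetical names; this is not Anthropic's implementation.


@dataclass
class Entry:
    kind: str          # "fact", "preference", or "decision"
    text: str
    updated: datetime


@dataclass
class KnowledgeBase:
    topic: str
    entries: dict[str, Entry] = field(default_factory=dict)

    def upsert(self, key: str, kind: str, text: str) -> None:
        """Add an entry, or replace the older entry stored under the same key."""
        self.entries[key] = Entry(kind, text, datetime.now(timezone.utc))

    def relevant(self, query: str) -> list[Entry]:
        """Naive keyword overlap, standing in for real retrieval."""
        words = set(query.lower().split())
        return [e for e in self.entries.values() if words & set(e.text.lower().split())]


def context_for(task: str, kbs: dict[str, KnowledgeBase]) -> list[str]:
    """Proactively gather matching entries from every KB before starting a task."""
    return [f"[{kb.topic}/{e.kind}] {e.text}" for kb in kbs.values() for e in kb.relevant(task)]
```

Keying entries lets a newer preference overwrite an outdated one instead of piling up alongside it, which is one simple reading of “continuously update.”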

A “skill” document uploaded by the user conceals a prompt injection. | Image: PromptArmor

Security remains a significant concern. Days after launch, PromptArmor disclosed a prompt injection vulnerability: attackers could hide invisible instructions in skill files that trick Claude, including its top model, Opus 4.5, into sending sensitive data out through whitelisted APIs. Anthropic acknowledges the risk but has not yet shipped a complete fix. Claude Cowork is currently available as a Research Preview for Claude Max subscribers on macOS, with cross-device sync and Windows support planned.
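One generic precaution against “invisible commands” is to scan uploaded text for characters a human reviewer cannot see but a model still reads. The heuristic below is a sketch, not PromptArmor’s method or Anthropic’s fix, and the exact hiding technique used in the reported attack is not described here. It flags Unicode format characters (zero-width spaces, bidi overrides, and so on) and the “tag” block, which can spell out hidden ASCII. It will miss other tricks, such as white-on-white or tiny-font text in a rendered document, and may flag legitimate uses such as emoji joiners.

```python
import unicodedata

# Heuristic scan for characters invisible to a human reviewer but still read by a model.
# Illustrative only: does NOT catch white-on-white or tiny-font text in rendered documents,
# and may flag legitimate uses (e.g. zero-width joiners inside emoji sequences).


def find_hidden_chars(text: str) -> list[tuple[int, str]]:
    """Return (index, Unicode name) for each invisible formatting character."""
    hits = []
    for i, ch in enumerate(text):
        cp = ord(ch)
        in_tag_block = 0xE0000 <= cp <= 0xE007F       # "tag" characters that can encode hidden ASCII
        is_format = unicodedata.category(ch) == "Cf"  # zero-width spaces/joiners, BOM, bidi overrides
        if in_tag_block or is_format:
            hits.append((i, unicodedata.name(ch, f"U+{cp:04X}")))
    return hits


def reveal_tag_text(text: str) -> str:
    """Decode ASCII smuggled as tag characters (U+E0020..U+E007E map to ' '..'~')."""
    return "".join(chr(ord(ch) - 0xE0000) for ch in text if 0xE0020 <= ord(ch) <= 0xE007E)
```

Running `find_hidden_chars` on a skill file before it is loaded, and showing reviewers the output of `reveal_tag_text`, makes this class of hidden payload visible. It is one layer of defense, not a complete fix.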

5 new AI-powered tools from around the web

arXiv is a free online library where researchers share pre-publication papers.

Your feedback is appreciated. Let us know how we can enhance this newsletter by replying to this email.

Want to engage with an insightful audience? To explore sponsorship opportunities with AI Breakfast, simply reply to this email or message us on X!