Good morning. It’s Monday, January 5th.

On this day in tech history: In 2001, Leo Breiman published “Statistical Modeling: The Two Cultures,” which distinguished between data modeling and algorithmic modeling. Although it wasn’t framed in terms of AI, the paper anticipated many of today’s debates in machine learning: interpretability versus performance, and theory versus scale. Breiman’s argument lent legitimacy to black-box predictive models just as computing power and datasets were growing rapidly.

-

OpenAI’s ‘Gumdrop’ could be an AI that lives in a pen-sized device

-

Google engineer says AI built in one hour what took her team a year

-

2026 belongs to recursive language models scaling to 10M tokens

-

5 New AI Tools

-

Latest AI Research Papers

You read. We listen. Please share your thoughts by replying to this email.

How could AI help your business run smoother?

We create and implement tailored AI workflows that automate administrative tasks, enable proprietary data analysis, and provide genuine efficiency improvements. Start with a free consultation to identify where AI can be beneficial — and where it may not be necessary.

Today’s trending AI news stories

OpenAI’s ‘Gumdrop’ could be an AI that lives in a pen-sized device

OpenAI is preparing to launch its first consumer AI gadget, Project Gumdrop, slated to be manufactured by Foxconn in either Vietnam or the US. The compact device, designed to resemble a smart pen or portable audio player, will include a microphone and camera to capture handwritten notes and send them directly to ChatGPT.

Comparable in size to an iPod Shuffle, Gumdrop is built for portability and ease of use, with a launch expected in 2026 or 2027. Technical hurdles remain, including unfinished software, privacy risks, and incomplete cloud infrastructure. Foxconn is expected to oversee the entire supply chain, from cloud infrastructure to consumer logistics.

Elsewhere, OpenAI’s Stargate UAE facility is ramping up its AI compute capacity, powered by four 340MW Ansaldo Energia AE94.3 gas turbines. Extreme desert heat limits their combined output to 1GW instead of the expected 1.3GW, but the Phase 1 target of 200MW by the end of 2026 remains on track. GPU deployment there is proceeding smoothly, in contrast to the delayed rollout at the Abilene, Texas site.
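As a rough sanity check on the power figures, this short Python sketch multiplies out the turbine ratings and compares the total with the reported desert output. It assumes 340MW is each turbine’s nameplate rating and 1GW is the combined derated output; the variable names are illustrative, not OpenAI’s.

```python
# Back-of-the-envelope power figures for the Stargate UAE site.
# Assumption: 340 MW is each turbine's nameplate rating and 1 GW is the
# combined output the turbines can deliver in desert heat.
TURBINES = 4
RATED_MW_PER_TURBINE = 340
DERATED_TOTAL_MW = 1_000

nameplate_mw = TURBINES * RATED_MW_PER_TURBINE  # combined nameplate rating
heat_loss_pct = 100 * (1 - DERATED_TOTAL_MW / nameplate_mw)  # share lost to heat

print(f"Nameplate capacity: {nameplate_mw} MW")
print(f"Heat derating: {heat_loss_pct:.1f}% below nameplate")
```

On these assumptions the script prints a nameplate capacity of 1360 MW and a derating of 26.5%, so heat costs roughly a quarter of the rated output.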

Co-founder Greg Brockman recently donated $25 million to MAGA Inc., the pro-Trump super PAC, as part of the $102 million it raised in the second half of 2025. Analysts speculate that major donors may be seeking influence over federal AI policy, which the Trump administration intends to centralize. Brockman’s backing of the pro-AI super PAC “Leading the Future” further illustrates the growing intersection of AI leadership and political aims. Learn more.

Google engineer says AI built in one hour what took her team a year

Google engineer Jaana Dogan reported that Anthropic’s Claude Code built a distributed agent orchestration system in just one hour, a project her team had worked on for more than a year. From minimal prompts, it produced a working prototype, though not a production-ready one. Boris Cherny, who created Claude Code, suggests adding self-checking loops, parallel agents, and integrations with tools like Slack, BigQuery, and Sentry to get closer to production-quality output. The episode shows how far AI-assisted coding has come, from single-line completions in 2022 to orchestrating entire codebases by 2025.
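Cherny’s suggestions describe a general workflow rather than a specific API. This hypothetical Python sketch illustrates the generic idea of a self-checking loop with parallel agents: several generator calls run concurrently, a checker (standing in for tests or linters) validates each candidate, and the loop retries until something passes. `generate`, `check`, and the toy stand-ins are illustrative assumptions, not Claude Code’s actual interface, and the Slack, BigQuery, and Sentry integrations are not modeled.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Optional


def self_checking_loop(
    generate: Callable[[str, int], str],
    check: Callable[[str], bool],
    task: str,
    parallel_agents: int = 3,
    max_rounds: int = 5,
) -> Optional[str]:
    """Hypothetical generate-and-verify loop.

    Each round launches `parallel_agents` generator calls concurrently, then
    returns the first candidate (in launch order) that passes `check`.
    Returns None if no candidate passes within `max_rounds` rounds.
    """
    with ThreadPoolExecutor(max_workers=parallel_agents) as pool:
        for round_no in range(max_rounds):
            # Give every attempt a distinct seed so retries can differ.
            seeds = [round_no * parallel_agents + i for i in range(parallel_agents)]
            candidates = list(pool.map(lambda seed: generate(task, seed), seeds))
            for candidate in candidates:
                if check(candidate):  # self-check: tests, linters, etc.
                    return candidate
    return None


def toy_generate(task: str, seed: int) -> str:
    # Stand-in for an agent call: just labels each attempt.
    return f"{task}-v{seed}"


def toy_check(candidate: str) -> bool:
    # Stand-in for a test suite: only attempt 4 "passes".
    return candidate.endswith("-v4")


result = self_checking_loop(toy_generate, toy_check, "build")
print(result)  # build-v4
```

Here the first round (attempts 0 to 2) fails the check, and the second round succeeds on attempt 4. In practice the checker is where most of the reliability comes from.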

Google’s Gemini 3 Pro also showed off its capabilities by deciphering handwritten marginalia in a copy of the 1493 Nuremberg Chronicle. It combined paleography, historical context, and biblical timelines to interpret the notes as calculations reconciling the Septuagint and Hebrew Bible chronologies.

Despite some minor arithmetic errors, the analysis was remarkably accurate and well-researched, suggesting that large models can help recover knowledge locked in centuries-old documents. Read more.

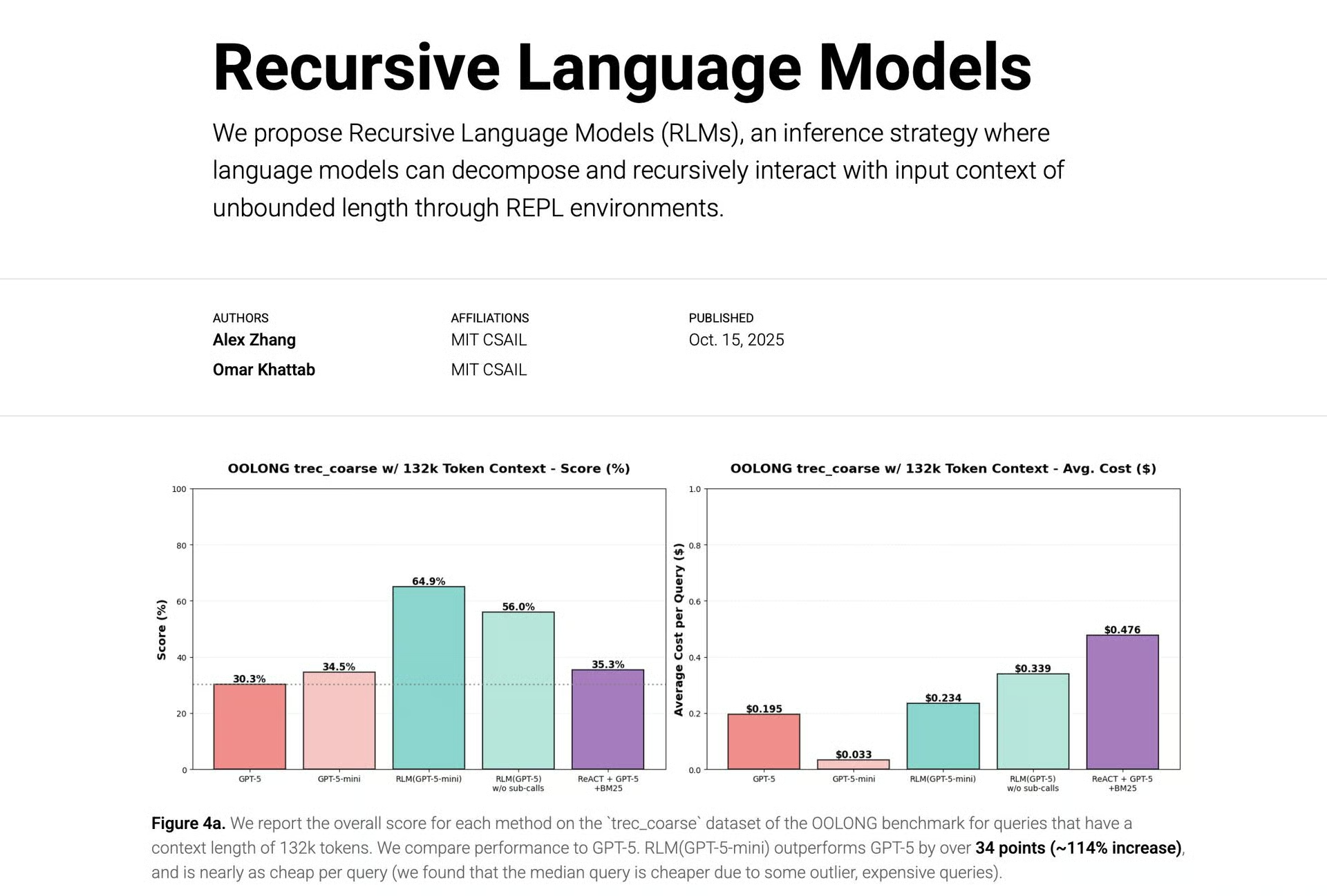

2026 belongs to recursive language models scaling to 10M tokens

AI may be about to break through its context-length limits. MIT researchers have released the full paper on Recursive Language Models (RLMs), with far broader experiments than the preliminary blog post they published last year.

RLMs are poised to be a major theme of 2026. Rather than forcing an entire long input into the context window, where conventional models struggle, an RLM stores the input as data the model can manipulate with code. A root model such as GPT-5 issues commands in a Python REPL to split a large context into pieces, dispatch sub-model calls on those pieces, cache intermediate results, and combine the aggregated outputs.

This approach enables accurate reasoning over 10 million tokens, far beyond current context windows, while maintaining coherence. On benchmarks such as S-NIAH, BrowseComp-Plus, and OOLONG, RLMs outperform traditional retrieval methods on complex tasks. Prime Intellect’s RLMEnv confines tool use to sub-models, keeping the main model’s context free for clear, logical reasoning.

According to Alex Zhang, the paper’s lead author, this marks a real shift from probabilistic guessing to structured, programmable reasoning: language models can now manage their own context to produce deeper, more structured outputs. Read more.
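To make the recursive idea concrete, here is a toy Python sketch of the split, sub-query, cache, and aggregate loop, applied to a needle-in-a-haystack search in the spirit of S-NIAH. It is a simplification under stated assumptions: in a real RLM the root model writes its own REPL code and the sub-calls go to a language model, whereas here the decomposition is a fixed map-reduce and `sub_model` is a hypothetical stand-in that just searches lines for the query. All names and the 1,000-character budget are illustrative, not the paper’s implementation.

```python
from functools import lru_cache

MAX_CHUNK_CHARS = 1_000  # hypothetical per-call context budget


@lru_cache(maxsize=None)
def sub_model(chunk: str, query: str) -> str:
    """Stand-in for a sub-LM call (hypothetical).

    A real RLM would prompt a language model here; this toy version returns
    the lines of `chunk` that contain `query`. lru_cache memoizes results.
    """
    return "\n".join(line for line in chunk.splitlines() if query in line)


def split(text: str, size: int) -> list[str]:
    """Split on line boundaries so each chunk is at most `size` chars
    (a single line longer than `size` becomes its own chunk)."""
    chunks, current = [], ""
    for line in text.splitlines(keepends=True):
        if current and len(current) + len(line) > size:
            chunks.append(current)
            current = ""
        current += line
    if current:
        chunks.append(current)
    return chunks


def recursive_query(context: str, query: str, size: int = MAX_CHUNK_CHARS) -> str:
    """Answer `query` over `context` without sending more than `size` chars
    to any single sub-model call, except for an unsplittable single line."""
    if len(context) <= size:
        return sub_model(context, query)
    chunks = split(context, size)
    if len(chunks) == 1:
        # One oversized line cannot be split further; hand it over as-is.
        return sub_model(context, query)
    # Map: recurse into each chunk. Reduce: join the non-empty partial answers.
    partials = [recursive_query(chunk, query, size) for chunk in chunks]
    return "\n".join(p for p in partials if p)


# Usage: hide one "needle" line in a 10,000-line haystack.
lines = [f"filler line {i}" for i in range(10_000)]
lines[7_777] = "the secret code is 4812"
haystack = "\n".join(lines)

answer = recursive_query(haystack, "secret code")
print(answer)  # the secret code is 4812
```

No single call ever sees the whole haystack, yet the needle is recovered. Because `sub_model` is memoized with `functools.lru_cache`, repeated identical chunks are processed only once, loosely mirroring the result caching described above.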

Can you scale without chaos?

It’s peak season, so volume is about to spike. Many teams either resort to hiring temporary workers (which is costly) or risk burning out their staff (which is even worse).

See what smarter teams do: let AI manage predictable workloads so your team can focus on high-value tasks.

5 new AI-powered tools from around the web

Latest AI Research Papers

arXiv is a free online library where researchers share papers before formal publication.

Your feedback is valuable. We would love to hear your thoughts on how we can enhance this newsletter. Please respond to this email.

Interested in reaching knowledgeable readers like you? To inquire about becoming a sponsor for AI Breakfast, reply to this email or send us a direct message on 𝕏!