Good morning! Today is Friday, January 2nd.

On this day in tech history: In 1980, Digital Equipment Corporation launched XCON (eXpert CONfigurer), a groundbreaking expert system for configuring VAX computers that saved the company millions of dollars each year. Its success showed the practical value of rule-based AI during a time of considerable hype. The system used more than 10,000 rules and automated 80% of orders, foreshadowing the knowledge graphs now paired with modern large language models (LLMs).

- The Screen Is Dying – And OpenAI Is Building What Comes Next
- DeepSeek Publishes Fundamental Breakthrough in Transformer Architecture
- Alibaba Bets on Openness with Qwen-Image-2512, a Rival to Google’s Nano Banana Pro
- 5 New AI Tools
- Latest AI Research Papers

You read. We listen. Share your thoughts by replying to this email.

How can AI streamline your business operations?

We develop tailored AI solutions that automate administrative tasks, help you analyze proprietary data, and enhance operational efficiency. Start with a complimentary consultation to discover where AI can be most beneficial for your business—and where it may not be necessary.

Today’s leading AI news topics

The Screen Is Dying – And OpenAI Is Building What Comes Next

The primary challenge facing AI today isn’t a lack of data or computing power; it’s the screen-dominated interfaces that demand our complete focus. OpenAI is reorganizing its teams around a critical problem: its audio models are not yet good enough to support real-time agents or audio-first devices.

According to reports from The Information, OpenAI has merged its engineering, product, and research divisions over the last two months to rectify ongoing challenges with accuracy, latency, and responsiveness—areas where voice still lags behind written text.

This new team is tasked with developing an audio system that enables quick, interactive conversations, moving away from rigid query-response patterns and paving the way for hands-free AI devices. Kundan Kumar, a former researcher at Character.AI, is leading this initiative, with an internal release slated for the first quarter of 2026.

This project aligns closely with OpenAI’s hardware goals following its $6.5 billion acquisition of a startup backed by Jony Ive, which focuses on audio. Greg Brockman envisions the year 2026 as pivotal for enterprise interaction and scientific advancements, with voice technology emerging as central to both.

Yet maintaining this leadership comes at a high price. OpenAI’s average stock compensation per employee stands at $1.5 million, and stock-based compensation is projected to equal 46% of total revenue in 2025. The company expects compensation costs to rise by $3 billion annually through 2030, underscoring the importance of employee retention amid intense competition, particularly given the substantial offers from Meta. Read more.

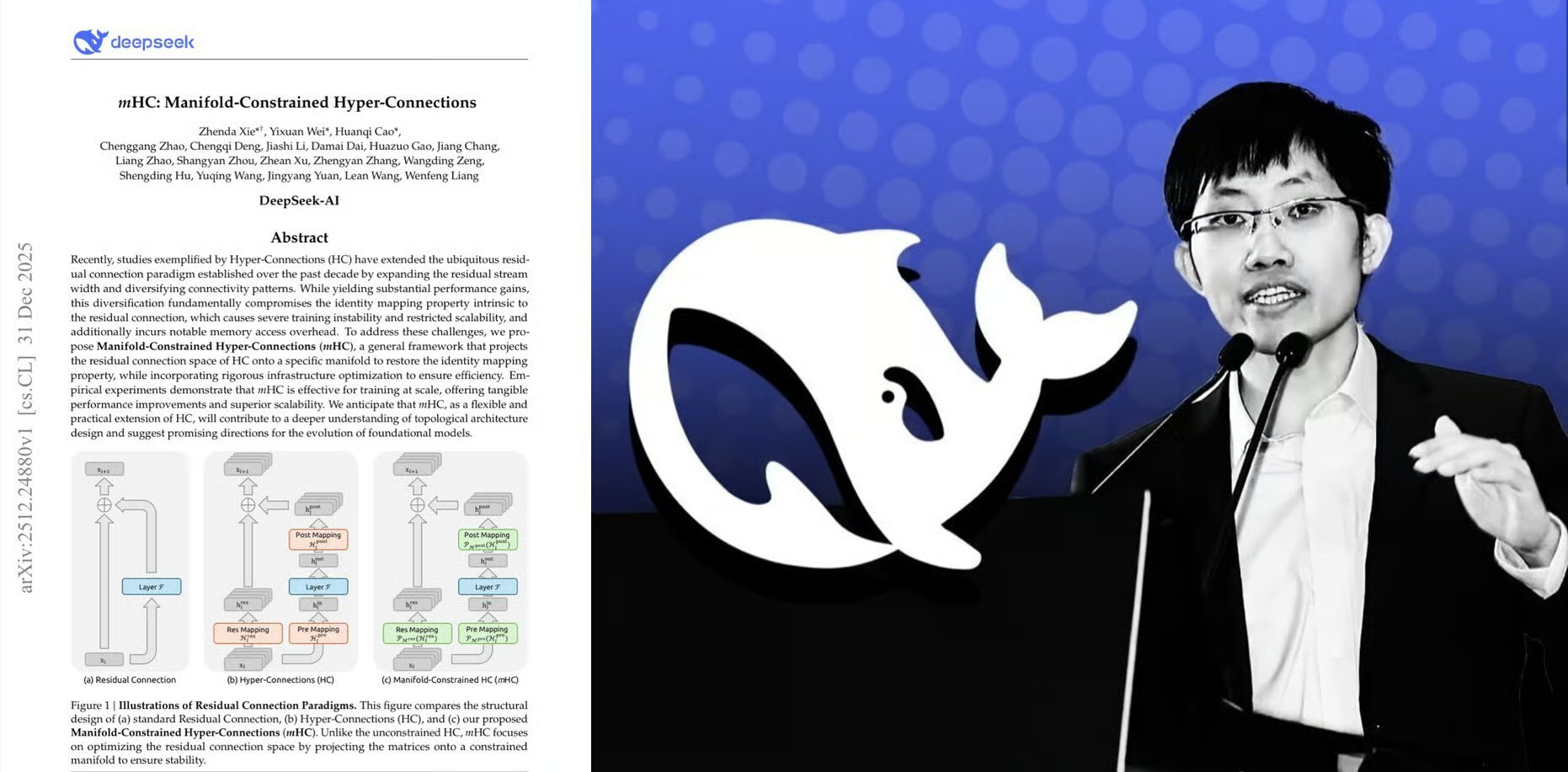

DeepSeek Reveals Major Breakthrough in Transformer Architecture

DeepSeek is kicking off 2026 by redefining the landscape of large-model training. The Chinese AI startup, founded by Liang Wenfeng, has introduced its Manifold-Constrained Hyper-Connections (mHC), a sophisticated adaptation of residual connections and ByteDance’s Hyper-Connections designed to stabilize gradients across expansive networks.

The mHC approach constrains these connections to a manifold (a structured mathematical space) to keep gradient flow consistent, even in models ranging from 3 billion to 27 billion parameters. Initial tests show faster learning and better performance across various benchmarks, with a hardware overhead of just 6.27%, making large-scale training significantly more manageable.

In summary, this methodology allows for the development of deep, stable models without exceeding memory or computation limits.

By publishing this research on arXiv, DeepSeek is showcasing how its forthcoming major model will be engineered, combining transparency with significant technical advancements. Read more.

Alibaba Invests in Openness with Qwen-Image-2512, a Competitor to Google’s Nano Banana Pro

Alibaba is positioning itself in the enterprise AI market with the launch of Qwen-Image-2512, intended as an open-source alternative to Google’s Gemini 3 Pro Image, also known as Nano Banana Pro. Google’s model recently raised the bar for text-rich, production-ready visuals but keeps those capabilities inside a closed cloud system.

Qwen’s proposition is straightforward: deliver essential capabilities, including sharp text rendering, layout control, and realism, while simultaneously eliminating vendor lock-in. Released under the Apache 2.0 license, Qwen-Image-2512 is designed for self-hosting, modifications, and commercial deployment, thereby granting enterprises greater control over costs, data sovereignty, and localization.

The model excels at producing human-like realism, accurate material textures, and reliable multilingual text rendering, achieving top rankings in Alibaba’s human evaluations of open-source image models. For those who prefer convenience, Alibaba also provides managed inference services at $0.075 per image. Read more.

5 new AI-driven tools trending online

arXiv is a free online repository where researchers can share their work as preprints, before formal publication.

Your feedback matters. Reply to this email and let us know how we can enhance this newsletter experience.

Looking to connect with intelligent readers? Become a sponsor for AI Breakfast by replying to this email or reaching out to us on 𝕏!