State Laws on AI Regulation

Understanding the evolving legal landscape surrounding artificial intelligence is crucial as states implement regulations to govern its development and use. This article provides an overview of current state-level restrictions on AI, examines the impact of a recent executive order signed by President Donald Trump, and discusses the implications for innovation and public safety.

According to Anjana Susarla, Professor of Information Systems at Michigan State University, in an article originally published in The Conversation, President Trump signed an executive order on December 11, 2025, aimed at overriding state laws concerning artificial intelligence that the administration views as impediments to innovation.

As the adoption of generative AI systems like ChatGPT grows, so too do state-level regulations. In 2025 alone, 38 states enacted various laws to govern AI, addressing issues ranging from AI-enabled stalking to manipulation of human behavior.

The executive order establishes a national framework for AI that is described as “minimally burdensome.” It instructs the U.S. Attorney General to create a task force to challenge state laws that conflict with this directive. It also directs the Secretary of Commerce to identify states whose laws are deemed excessively restrictive and to withhold funding from them. The order exempts state laws related to child safety.

The Role of Federal Law

Executive orders serve as guidelines for federal agencies to interpret and implement existing laws. This particular order aims to encourage federal departments to act in ways the administration believes align with its legal framework.

Tech Industry Influence

Major tech firms have lobbied heavily for the federal government to preempt state regulations, arguing that complying with divergent state laws stifles innovation.

State Regulations: A Balancing Act

Proponents of state regulations argue they seek to balance public safety with economic growth. Key states, including California, Colorado, Texas, and Utah, have enacted significant laws addressing AI. Below are some notable regulations:

Algorithmic Discrimination

Colorado’s Consumer Protections for Artificial Intelligence represents the first comprehensive state law aimed at regulating AI systems in crucial sectors such as employment, housing, and healthcare. Though its enforcement is pending, the law requires organizations using predictive AI systems to assess the impacts and inform consumers when AI plays a role in critical decisions affecting them.

Similarly, Illinois has enacted a law, set to take effect on January 1, 2026, that amends the Illinois Human Rights Act to prohibit discriminatory practices enabled by AI tools.

Frontier AI Models

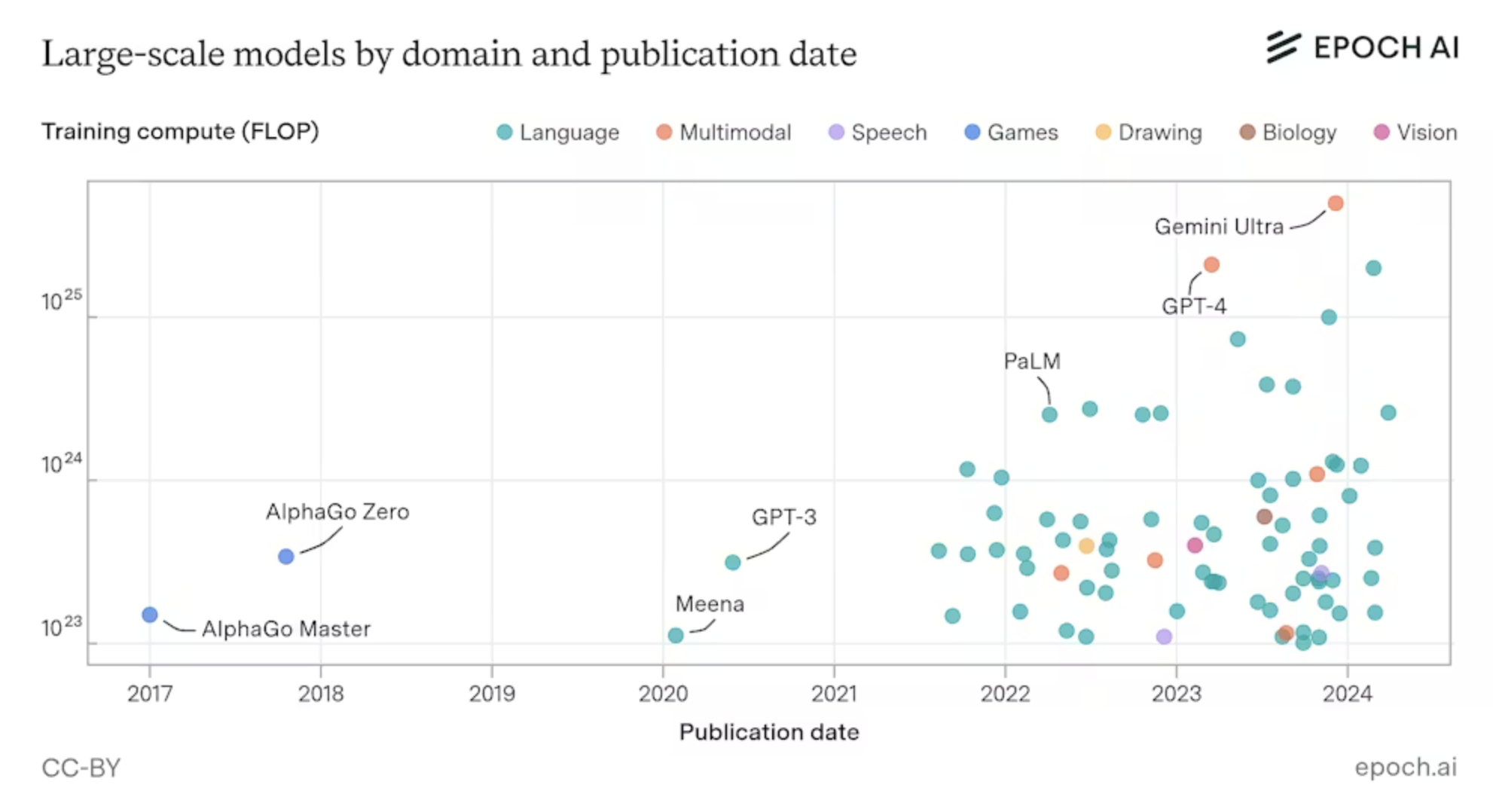

California’s Transparency in Frontier Artificial Intelligence Act establishes regulations for the most powerful AI systems, often referred to as foundation or frontier models. These models, which can be adapted to a wide range of tasks without further training, require substantial resources to develop.

Developers of these high-cost AI models must detail their adherence to national and international standards and submit assessments regarding catastrophic risks. The law also mandates a system for reporting critical safety incidents.

Disclosures and Accountability

Texas has enacted the Texas Responsible AI Governance Act, which restricts the deployment of AI systems that could manipulate behavior. Notably, it provides for a “sandbox” environment where developers can safely test AI systems.

Meanwhile, Utah’s Artificial Intelligence Policy Act requires organizations that use generative AI tools to disclose that use to consumers, with the aim of ensuring accountability for any resulting harms.

Other Legislative Efforts

States are also exploring additional measures to protect residents from AI risks. For instance, Florida Governor Ron DeSantis opposes federal encroachment on state AI laws and has proposed a Florida AI Bill of Rights to address the technology’s dangers.

Additionally, attorneys general from 38 states have urged major AI companies to rectify problematic outputs that may lead users to form misplaced trust in these systems.

The Uncertain Path Ahead

The future impact of the executive order remains uncertain, as many observers argue it oversteps legal boundaries, given that only Congress holds the authority to override state laws. Nonetheless, the order concludes with a directive for federal officials to propose relevant legislation.

As states continue to craft their own regulations around AI, the dialogue surrounding the balance of innovation, safety, and accountability will undoubtedly continue to evolve.