As discussions about the implications of artificial intelligence (AI) gain traction, it is crucial to examine the systemic risks it poses. Recent media commentary reflects growing concern about AI’s potential harms. This article juxtaposes a lighthearted Time magazine piece that downplays these risks with a more serious analysis by VoxEU experts, which makes clear the pressing need for a proactive approach to AI governance.

Time’s piece, The World Is Not Prepared for an AI Emergency, frames the scenario largely as one of panic rather than assessing the fundamental failures that could occur and might not be easily rectified:

Imagine waking up to a world where the internet flickers, card payments malfunction, ambulances are dispatched to incorrect locations, and emergency broadcasts lose credibility. Be it due to a flawed model, malicious intent, or a cascading cyber event, an AI-induced crisis can rapidly traverse borders.

The initial signs of such an AI emergency may be indistinguishable from a general outage or security breach. Only later, if at all, might it be confirmed that AI systems played a significant role.

While some governments and corporations are beginning to establish safeguards against these risks, such as the European Union AI Act and the U.S. National Institute of Standards and Technology framework, a critical gap persists. Existing technical protocols for restoring networks or addressing hacking attempts do not encompass strategies to mitigate social panic or preserve trust and communication in a crisis driven by AI.

Preventing an AI crisis is just part of the challenge. Equally important is the framework for preparedness and response. Who determines the point at which an AI incident escalates to an international emergency? Who delivers clear messages to the public amid the chaos of misinformation? How do we ensure continued dialogue between governments when traditional communication lines falter?…

We do not require intricate new institutions to manage AI; rather, we need governments to develop proactive strategies.

The VoxEU analysis that follows shows that AI-related risks are multifaceted and often amplify existing vulnerabilities, which makes preventive measures urgent.

By Stephen Cecchetti, Rosen Family Chair in International Finance, Brandeis International Business School; Vice-Chair, Advisory Scientific Committee, European Systemic Risk Board; Robin Lumsdaine, Crown Prince of Bahrain Professor of International Finance, Kogod School of Business, American University; Professor of Applied Econometrics, Erasmus School of Economics, Erasmus University Rotterdam; Tuomas Peltonen, Deputy Head of the Secretariat, European Systemic Risk Board; and Antonio Sánchez Serrano, Senior Lead Financial Stability Expert, European Systemic Risk Board. Originally published at VoxEU.

While artificial intelligence brings considerable advantages, such as accelerating scientific progress, boosting economic growth, improving decision-making, and enhancing healthcare, it also raises significant concerns about risks to the financial system and to society. This column examines how AI interacts with the main sources of systemic risk. It proposes a combination of competition and consumer protection policies, together with adjustments to regulatory frameworks, to address the resulting vulnerabilities.

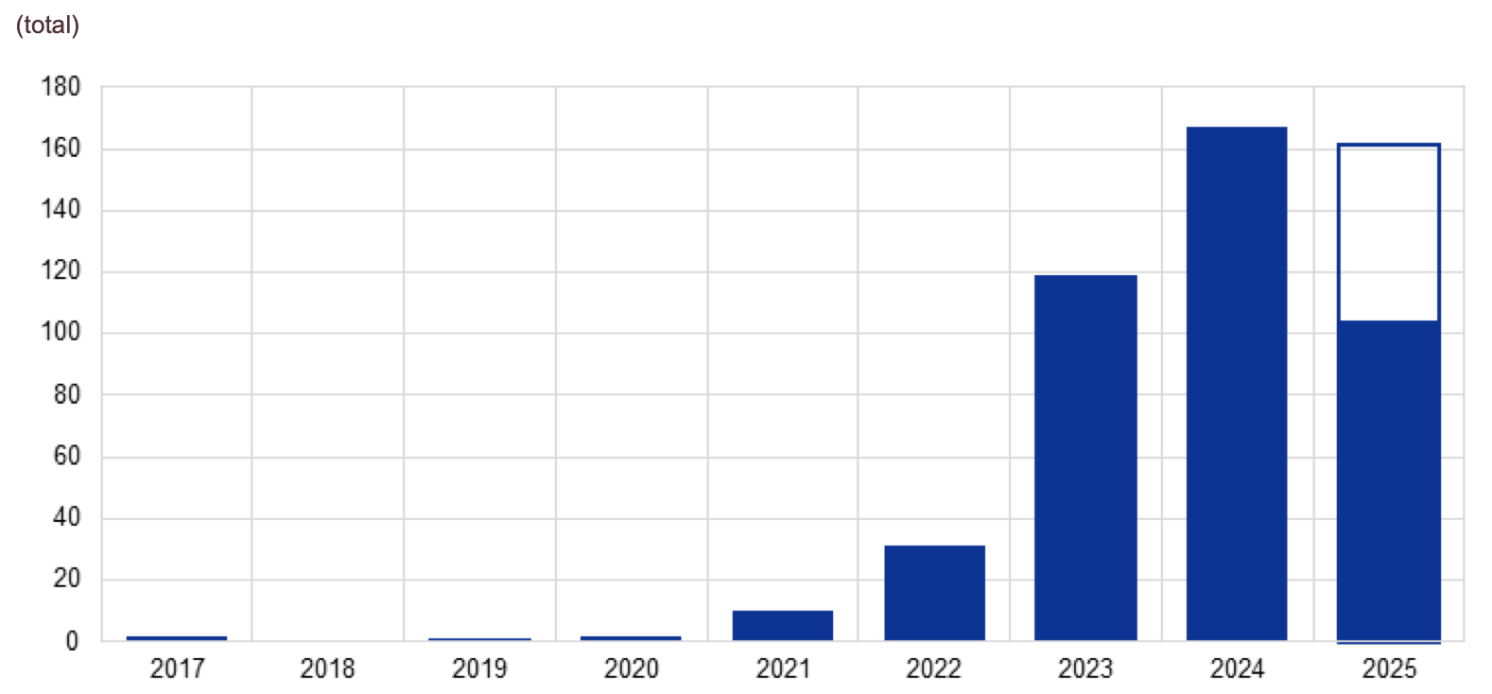

Recently, companies have invested heavily in large-scale AI models (those trained using more than 10^23 floating-point operations), such as OpenAI’s ChatGPT, Anthropic’s Claude, Microsoft’s Copilot, and Google’s Gemini. OpenAI does not disclose precise user numbers, but estimates suggest that ChatGPT has roughly 800 million weekly active users. Figure 1 shows the rapid growth since 2020 in the number of large-scale AI systems released each year. The tools’ intuitive interfaces likely contribute to their swift adoption, as companies actively integrate them into everyday operations.

Figure 1 Number of large-scale AI systems released per year

Notes: Data for 2025 run up to 24 August. The white box in the 2025 bar shows an extrapolation to the full year based on the data available to date.

Source: Our World in Data.

There is an expanding body of literature investigating how the rapid advancement and widespread adoption of AI can impact financial stability (references include Financial Stability Board 2024, Aldasoro et al. 2024, Daníelsson and Uthemann 2024, Videgaray et al. 2024, Daníelsson 2025, and Foucault et al. 2025). A recent report from the Advisory Scientific Committee of the European Systemic Risk Board (Cecchetti et al. 2025) explores how AI technologies interact with systemic risks and discusses regulatory implications by identifying market failures and externalities.

The Development of AI in Our Societies

AI, which includes both advanced machine-learning models and sophisticated large language models, can solve complex problems and transform how resources are allocated. Its general applications range from supporting decision-making and simulating large networks to summarizing massive datasets and solving complex optimization problems. Notably, AI can raise productivity in three ways: through automation, by making existing tasks more efficient, and by enabling new tasks, some of which may not yet have been imagined. Nevertheless, current estimates of AI’s overall productivity impact remain modest. A recent study of the U.S. economy by Acemoglu (2024) puts its effect on total factor productivity (TFP) at only 0.05% to 0.06% per year over the next decade. That is small compared with the historical average TFP growth of around 0.9% per year over the past 25 years.
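The Acemoglu (2024) range can be put in perspective with a back-of-the-envelope calculation. This is our own illustration, not the study’s method. It assumes the annual effects simply compound over ten years and compares them with the 0.9% historical rate quoted above:

```latex
\begin{aligned}
\text{AI effect, low end:}\quad & (1.0005)^{10} - 1 \approx 0.50\% \\
\text{AI effect, high end:}\quad & (1.0006)^{10} - 1 \approx 0.60\% \\
\text{Historical TFP growth:}\quad & (1.009)^{10} - 1 \approx 9.4\% \\
\text{AI boost relative to historical rate:}\quad & \tfrac{0.05}{0.9} \approx 5.6\%, \qquad \tfrac{0.06}{0.9} \approx 6.7\%
\end{aligned}
```

On these assumptions, AI would add roughly half a percentage point to the level of TFP over the decade. That equals about one-sixteenth of the historical trend rate of growth.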

Analyses reveal a varied impact across different segments of the labor market. For example, Gmyrek et al. (2023) evaluated 436 occupations and identified four categories: those least likely to be affected by AI (primarily manual and unskilled jobs), roles where AI can augment tasks (such as photographers and primary school teachers), positions with uncertain impacts (including financial advisors and journalists), and those most at risk from AI replacement (e.g., accounting clerks and bank tellers). Their findings suggest that approximately 24% of clerical tasks are highly susceptible to AI, with an additional 58% facing moderate exposure.

AI and Sources of Systemic Risk

The report stresses that AI’s ability to process vast amounts of unstructured data and to interact intuitively with users allows it both to supplement and to replace human functions. Using these technologies nonetheless carries inherent risks. These include difficulty detecting AI errors, biases inherited from training data, overreliance on AI driven by misplaced trust, and difficulty overseeing complex systems.

As with any technological advancement, the crux of the issue lies not in AI itself, but in how individuals and organizations choose to develop and employ these tools. In financial contexts, the utilization of AI by investors and intermediaries may introduce externalities and spillover effects.

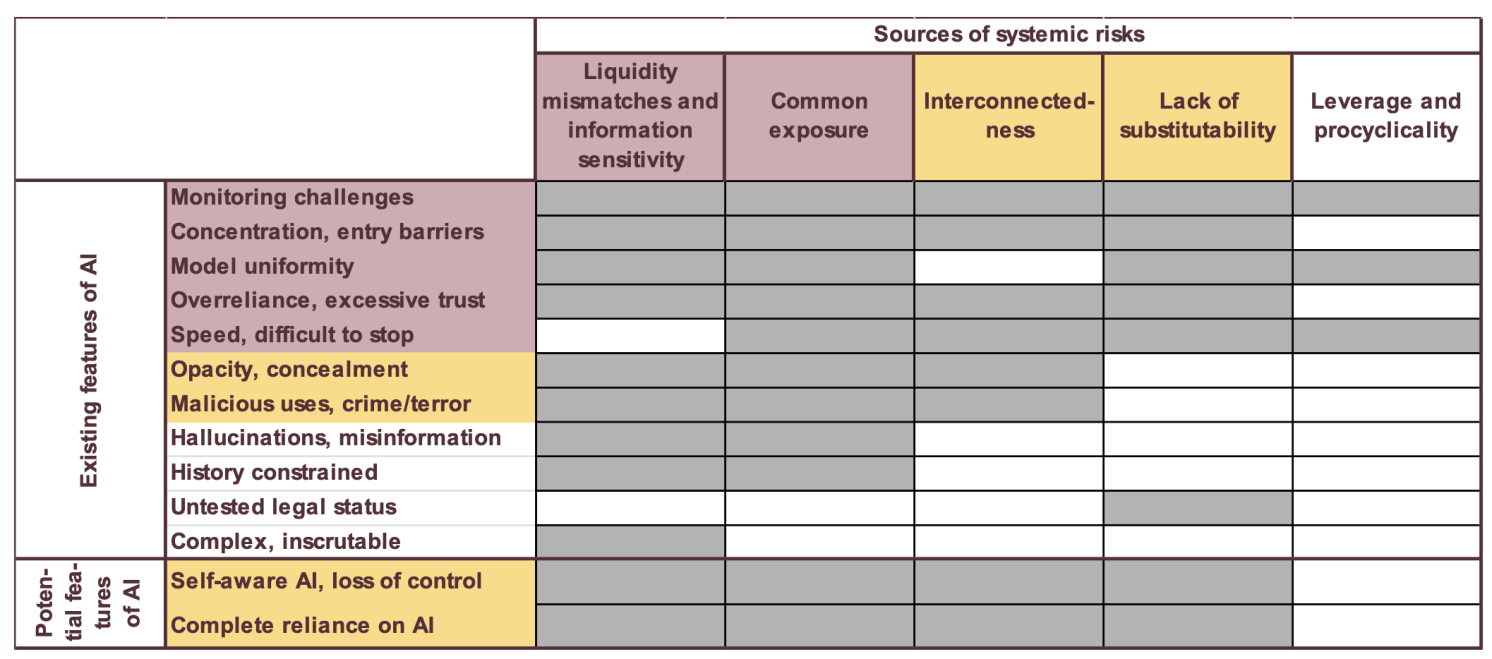

We therefore analyze how AI may amplify or transform existing systemic financial risks, as well as create new ones. We group these risks into five sources: liquidity mismatches, common exposures, interconnectedness, lack of substitutability, and leverage. As Table 1 shows, the features of AI that may exacerbate these risks include:

- Monitoring challenges: the complexity of AI systems makes effective oversight harder for both users and regulators.

- Concentration and entry barriers: a handful of AI providers can become single points of failure and create extensive interconnectedness.

- Model uniformity: widespread adoption of similar AI models can lead to correlated exposures and amplified market reactions.

- Overreliance and excessive trust: strong initial performance can encourage greater risk-taking and weaker oversight.

- Speed: faster transactions and responses heighten procyclicality and make adverse dynamics harder to contain.

- Opacity and concealment: the complexity of AI reduces transparency and makes deliberate obfuscation of information easier.

- Malicious uses: AI can strengthen bad actors’ capacity for fraud, cyber-attacks, and market manipulation.

- Hallucinations and misinformation: AI can produce false or misleading content, leading to widespread poor decisions and market volatility.

- History constraints: because AI relies on past data, it may fail to anticipate unforeseen ‘tail events’, encouraging excessive risk-taking.

- Untested legal status: unclear legal liability for the actions of AI may pose systemic risks if providers or financial institutions run into legal obstacles.

- Complexity: a system that is hard to understand can trigger panic when users discover flaws or unexpected behavior.

Table 1 How current and potential features of AI can amplify or create systemic risk

Notes: Titles of existing AI features are highlighted red if they contribute to four or more sources of systemic risk and orange if they contribute to three. Potential features of AI are marked orange to indicate uncertainty. Columns for sources of systemic risk are colored red if they relate to ten or more AI features and orange if they relate to six to nine.

Source: Cecchetti et al. (2025).
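The color-coding rule in the Table 1 notes can be stated precisely as a small function. This is a hypothetical sketch, not code from Cecchetti et al. (2025). The function names are ours. One point is an assumption: the "orange" band for risk-source columns is taken to cover six to nine features, because ten or more is already red.

```python
from typing import Optional

# Hypothetical encoding of the Table 1 legend (Cecchetti et al. 2025).
# Assumption: orange for risk-source columns means 6 to 9 related features,
# since 10 or more is already classed as red.


def feature_color(num_risk_sources: int) -> Optional[str]:
    """Highlight for an existing AI feature, given how many of the five
    systemic risk sources it contributes to."""
    if num_risk_sources >= 4:
        return "red"
    if num_risk_sources == 3:
        return "orange"
    return None  # no highlight


def potential_feature_color() -> str:
    """Potential (not yet realized) features are always orange,
    signaling uncertainty rather than a count."""
    return "orange"


def source_color(num_ai_features: int) -> Optional[str]:
    """Highlight for a systemic-risk-source column, given how many
    AI features it relates to."""
    if num_ai_features >= 10:
        return "red"
    if 6 <= num_ai_features <= 9:
        return "orange"
    return None  # no highlight


if __name__ == "__main__":
    # Illustrative counts only; they are not taken from the actual table.
    print(feature_color(4), feature_color(3), feature_color(2))
    print(source_color(10), source_color(7), source_color(5))
```

Writing the thresholds as explicit inequalities makes the bands non-overlapping. A count of exactly ten therefore maps to red only.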

Possible future developments, such as the emergence of self-aware AI or complete dependence on AI, could further amplify these risks. They could also bring new challenges, namely loss of human control and extreme societal reliance, although such scenarios remain speculative for now.

Policy Response

Addressing these systemic risks and the associated market failures (such as fixed costs, network effects, and information asymmetries) requires a critical reassessment of competition and consumer protection policies alongside macroprudential policies. Key policy proposals include:

- Regulatory adjustments like recalibrating capital and liquidity requirements, enhancing circuit breakers, modifying regulations on insider trading and other market abuses, and refining central bank liquidity provisions.

- Transparency requirements, such as labeling financial products to disclose their use of AI.

- ‘Skin in the game’ and ‘level of sophistication’ standards to ensure AI providers and users bear appropriate risk levels.

- Supervisory enhancements aimed at guaranteeing adequate IT resources and personnel for oversight, boosting analytical capabilities, reinforcing enforcement, and promoting cross-border collaboration.

Authorities must conduct thorough analyses to obtain a better understanding of AI’s influence and applications within the financial sector.

The current geopolitical climate amplifies the urgency of this issue. Failure by authorities to keep pace with AI’s integration in finance will hinder their ability to identify emerging systemic risks, leading to more frequent financial stress that necessitates costly public interventions. Given the global nature of AI, it is imperative for governments to collaborate on developing international standards to prevent actions in one jurisdiction from undermining stability in others.

See original post for references